Global mating means evolution proceeds more slowly than with local mating. The whole population must advance at the same pace. With local mating, by contrast, little groups can form and intensively explore some specialised avenue. If successful, they then come back and "invade" the rest of the population and wipe it out (either literally, or just by competition).

Some experiments raise and lower geographical barriers in an attempt to encourage periodic formation of species / subspecies.
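The difference between global and local mating comes down to how a mate is chosen. The sketch below assumes one common way of making mating local: individuals sit at positions on a ring and may only mate with neighbours a few places away. The function names and the `radius` parameter are hypothetical, chosen for illustration.

```python
import random


def pick_mate_global(i: int, pop_size: int, rng: random.Random) -> int:
    """Global mating: any other member of the whole population may be chosen."""
    j = rng.randrange(pop_size - 1)   # draw from pop_size - 1 slots...
    return j if j < i else j + 1      # ...then skip over i itself


def pick_mate_local(i: int, pop_size: int, rng: random.Random, radius: int = 2) -> int:
    """Local mating: individuals sit on a ring and may only mate with
    neighbours at most `radius` places away (never with themselves)."""
    offset = rng.choice([d for d in range(-radius, radius + 1) if d != 0])
    return (i + offset) % pop_size


rng = random.Random(0)
print(pick_mate_global(5, 100, rng))  # anywhere in 0..99 except 5
print(pick_mate_local(0, 100, rng))   # one of 98, 99, 1, 2
```

With local mating, a new gene arising at one position can spread only a few places per generation, so different stretches of the ring are free to head off in different directions before meeting again.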

Diversion - Speciation

In nature, 2 species can gradually separate from a common ancestral species. Indeed, nothing interesting would exist if this did not happen.

A "species" is defined as a group of creatures that can interbreed with each other and produce fertile offspring.

Speciation cannot happen if, as in most machine evolution experiments, fit individuals breed randomly with other fit individuals across the entire world population. Then the whole population progresses together. There has to be some concept of mating with what is nearby.

e.g. An island gets cut off from the mainland. The woodpeckers on the island breed only with others on the island. Gradually their gene pool starts drifting in a different direction from that of the woodpeckers on the mainland. 5 million years later, the sea level falls and the island is joined to the mainland again. But now the 2 woodpecker groups cannot breed with each other - they are 2 species.
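The island example can be imitated with a toy simulation, sketched here under loud assumptions: genomes are 64-bit strings, mating is random but only within one population, and "cannot breed" is modelled by a hypothetical fertility rule `can_interbreed` that rejects pairs whose genomes differ in more than 12 bits. None of these numbers come from biology; they are chosen only to show isolated gene pools drifting apart.

```python
import random

Genome = tuple  # a tuple of 0/1 bits


def mutate(genome: Genome, rate: float, rng: random.Random) -> Genome:
    """Flip each bit independently with probability `rate`."""
    return tuple(b ^ 1 if rng.random() < rate else b for b in genome)


def breed(a: Genome, b: Genome, rng: random.Random) -> Genome:
    """One-point crossover of two parents."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def next_generation(pop: list, rate: float, rng: random.Random) -> list:
    """Random mating, but only within this one population (no migration)."""
    return [mutate(breed(rng.choice(pop), rng.choice(pop), rng), rate, rng)
            for _ in pop]


def hamming(a: Genome, b: Genome) -> int:
    return sum(x != y for x, y in zip(a, b))


def mean_distance(pop1: list, pop2: list) -> float:
    return sum(hamming(a, b) for a in pop1 for b in pop2) / (len(pop1) * len(pop2))


def can_interbreed(a: Genome, b: Genome, max_distance: int = 12) -> bool:
    """Hypothetical fertility rule: genomes too far apart give no fertile offspring."""
    return hamming(a, b) <= max_distance


def isolate(ancestor: Genome, size: int, generations: int, rate: float,
            rng: random.Random):
    """Split one ancestral population into an island and a mainland that never meet."""
    island = [ancestor] * size
    mainland = [ancestor] * size
    for _ in range(generations):
        island = next_generation(island, rate, rng)
        mainland = next_generation(mainland, rate, rng)
    return island, mainland


rng = random.Random(42)
island, mainland = isolate(tuple([0] * 64), size=10, generations=1000, rate=0.001, rng=rng)
print(mean_distance(island, island), mean_distance(island, mainland))
print(can_interbreed(island[0], mainland[0]))
```

Within each population the genomes stay close, because everyone keeps mixing with everyone else nearby, while the two populations drift apart because nothing connects their gene pools.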

The species problem

It turns out to be very hard to define "species".

- The definition of being able to interbreed only works with sexual reproduction. How do you define species for life that uses asexual reproduction?

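- Ring species - each population in a chain (or ring) of populations can interbreed with its neighbours, but the populations at the two ends cannot interbreed with each other. So not all individuals can interbreed, yet there is no obvious place to draw a boundary between species.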

- The species problem - the problem of defining a species.

"Species" do not exist over time

The concept of species also breaks down if you look at the population over time.

"Species" do not really exist as you travel back and forth through time. There is no such thing as the "start" of a species. Classifying extinct creatures into species runs the risk of distorting history. e.g. How do we know that what we call early H. sapiens could breed with us, if they were alive today? How do we know they couldn't breed with what we call late H. erectus?

- Chronospecies - A species that changes slowly over time, so that its earlier members probably could not breed with its later members if they were ever brought together.

"Species" can only be defined relative to a particular point in time. As you travel back through the ancestors of living species, the definition of the species soon gets confused and has to be revised as you go along.

Anybody who talks about "the first humans" does not understand nature. There is no such thing as "the first humans".

- Common ancestors of all humans

- Mathematical models of human mating are possible if we assume random mating (unrealistic).

- For non-random mating, we really need computer simulations.
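A simulation of common ancestors can be sketched as follows, assuming a constant population of `n` people per generation and two parents per person. With `radius=None` both parents are drawn from the whole previous generation (random mating); otherwise people live on a ring and both parents come from within `radius` places, a hypothetical stand-in for non-random, local mating. The function name and parameters are illustrative only.

```python
import random
from typing import Optional


def generations_to_common_ancestor(n: int, rng: random.Random,
                                   radius: Optional[int] = None,
                                   max_generations: int = 100_000) -> int:
    """Go back in time until someone in the past is an ancestor of all n people alive now."""
    everyone = (1 << n) - 1
    # descendants[p] = bitmask of the present-day people descended from person p
    descendants = [1 << i for i in range(n)]
    generations = 0
    while everyone not in descendants:
        if generations == max_generations:
            raise RuntimeError("no common ancestor found")
        previous = [0] * n
        for child in range(n):
            if radius is None:
                parents = rng.sample(range(n), 2)           # random mating
            else:
                offsets = rng.sample(range(-radius, radius + 1), 2)
                parents = [(child + d) % n for d in offsets]  # local mating on a ring
            for p in parents:
                previous[p] |= descendants[child]
        descendants = previous
        generations += 1
    return generations


rng = random.Random(7)
print(generations_to_common_ancestor(64, rng))            # random mating
print(generations_to_common_ancestor(64, rng, radius=1))  # local mating
```

Under random mating a common ancestor of everyone turns up within a handful of generations. With `radius=1` a person's descendants can spread at most one place per generation in each direction, so it must take at least (n - 1) / 2 generations; local mating pushes the common ancestor much further back, which is one reason random-mating models can mislead.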

if x then a

where x is a condition or state of the world, and a is the action we will take in that state.

Many rules may fire at once, so the conflict must be resolved and one rule chosen to follow. The conflict is resolved largely on the basis of the "fitness" of each rule.
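Conflict resolution can be sketched as: collect every rule whose condition holds, then pick one with probability proportional to its strength (a roulette-wheel draw). Roulette selection is an assumption here; always taking the strongest rule would be another option. The rules, states and actions below are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Rule:
    condition: Callable[[dict], bool]  # x: a test on the state of the world
    action: str                        # a: the action to take in that state
    strength: float                    # the rule's "fitness" (must be > 0 here)


def choose_rule(rules: list, state: dict, rng: random.Random) -> Optional[Rule]:
    """Collect every rule that fires in `state`, then resolve the conflict by a
    roulette-wheel draw weighted by strength (stronger rules win more often)."""
    firing = [r for r in rules if r.condition(state)]
    if not firing:
        return None
    return rng.choices(firing, weights=[r.strength for r in firing], k=1)[0]


rules = [
    Rule(lambda s: s["hungry"], "eat", 9.0),
    Rule(lambda s: s["tired"], "sleep", 5.0),
    Rule(lambda s: True, "wander", 1.0),
]
rng = random.Random(0)
print(choose_rule(rules, {"hungry": True, "tired": False}, rng).action)
```

Here "eat" and "wander" both fire when the creature is hungry, and "eat" wins about 90% of the time. Drawing at random rather than always taking the strongest rule lets weaker rules still get tried occasionally.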

"Bucket brigade algorithm":

Further Reading - John Holland, "Escaping Brittleness", in Michalski et al. (eds.), Machine Learning: An Artificial Intelligence Approach, Vol. 2, 1986. Library 006.31.MIC.

See also some discussion in my PhD thesis.
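The bucket brigade passes credit back along chains of rules. A heavily simplified sketch, assuming the rules fire in a fixed chain each episode: every rule pays a bid (a fixed fraction of its strength) to the rule that fired just before it, and the external reward goes to the last rule. The function name, `bid_fraction` and the numbers are hypothetical, not Holland's full message-passing scheme.

```python
def bucket_brigade_episode(strengths: dict, chain: list, reward: float,
                           bid_fraction: float = 0.1) -> None:
    """One episode of a simplified bucket brigade, updating `strengths` in place.

    chain: the rules in the order they fired. Each rule pays a bid to the rule
    that fired just before it (the rule that set up the situation it acted in).
    The external reward goes to the last rule in the chain.
    """
    for t, rule in enumerate(chain):
        bid = bid_fraction * strengths[rule]
        strengths[rule] -= bid
        if t > 0:
            strengths[chain[t - 1]] += bid
    strengths[chain[-1]] += reward


strengths = {"r0": 1.0, "r1": 1.0, "r2": 1.0, "r3": 1.0}
for _ in range(2000):
    bucket_brigade_episode(strengths, ["r0", "r1", "r2", "r3"], reward=10.0)
print(strengths)  # every rule ends up near reward / bid_fraction = 100
```

Only "r3" ever receives the external reward directly, yet after repeated episodes the early rule "r0" is just as strong: credit has flowed back down the chain to the rules that set the stage.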

We can also view it as pattern matching:

if x then c

or:

x -> c

where x is the multi-dimensional input pattern and c is the class it belongs to. We then use a form of supervised learning: the teacher returns the category that x should have been classified in, and both the strengths of the rules (increased or decreased) and their population (strong rules reproduce, weak ones die) adjust in response to this feedback.
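The feedback loop can be sketched with rules whose conditions are exact input patterns. The reward and penalty sizes, the "replace the weakest with a mutated copy of the strongest" reproduction step, and all names are hypothetical choices for illustration.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    condition: tuple        # an input pattern x = (x1, ..., xn)
    cls: int                # the class c this rule says x belongs to
    strength: float = 1.0


def classify(rules: list, x: tuple) -> Optional[int]:
    """Predict the class of x using the strongest rule whose condition is x."""
    matching = [r for r in rules if r.condition == x]
    if not matching:
        return None
    return max(matching, key=lambda r: r.strength).cls


def feedback(rules: list, x: tuple, true_cls: int,
             reward: float = 1.0, penalty: float = 0.5) -> None:
    """The teacher says x belongs to true_cls: strengthen the matching rules
    that agree, weaken the matching rules that disagree."""
    for r in rules:
        if r.condition == x:
            if r.cls == true_cls:
                r.strength += reward
            else:
                r.strength = max(0.0, r.strength - penalty)


def replace_weakest(rules: list, rng: random.Random, n_values: int = 10) -> None:
    """Strong rules reproduce, weak rules die: overwrite the weakest rule with a
    copy of the strongest one, with one input position set to a random value."""
    weakest = min(range(len(rules)), key=lambda i: rules[i].strength)
    parent = max(rules, key=lambda r: r.strength)
    condition = list(parent.condition)
    condition[rng.randrange(len(condition))] = rng.randrange(n_values)
    rules[weakest] = Rule(tuple(condition), parent.cls)


rules = [Rule((1, 7, 3, 5, 5), 0), Rule((1, 7, 3, 5, 5), 1), Rule((2, 3, 5, 5, 5), 1)]
feedback(rules, (1, 7, 3, 5, 5), true_cls=0)
print(classify(rules, (1, 7, 3, 5, 5)))  # 0
replace_weakest(rules, random.Random(0))
```

The two rules for (1,7,3,5,5) disagree; after one round of teacher feedback the correct one is stronger, and the wrong one is the first to be replaced.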

If x = (x1,..,xn), rules may be things like:

(1,7,3,5,5) -> 0

(1,1,8,5,5) -> 0

(1,7,9,3,5) -> 0

(2,3,5,5,5) -> 1

but they are a lot more powerful if we can include wildcards (*), so that rules are of the form:

(1,*,*,*,5) -> 0

(2,3,5,*,*) -> 1

Now generalisations can evolve.

Questions - Does the generalisation cover the whole space?

Can a point belong to multiple classes?
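Wildcard matching, and both questions, can be made concrete in a few lines. The sketch assumes rules are `(condition, class)` pairs with `"*"` as the wildcard (a representation chosen for illustration): collecting the classes of every matching rule shows at once whether a point is uncovered (no class) or claimed by several classes.

```python
WILDCARD = "*"


def matches(condition: tuple, x: tuple) -> bool:
    """A condition matches x if every position is equal or is the wildcard."""
    return len(condition) == len(x) and all(
        c == WILDCARD or c == xi for c, xi in zip(condition, x))


def classes_for(rules: list, x: tuple) -> set:
    """All classes claimed for x: an empty set means x is not covered by any
    rule; more than one class means the rules conflict on x."""
    return {cls for condition, cls in rules if matches(condition, x)}


rules = [((1, "*", "*", "*", 5), 0), ((2, 3, 5, "*", "*"), 1)]
print(classes_for(rules, (1, 7, 9, 3, 5)))  # {0}
print(classes_for(rules, (3, 3, 3, 3, 3)))  # set(): not covered
```

The single rule (1,*,*,*,5) -> 0 covers all three class-0 examples given earlier, but it says nothing about most of the space; adding a rule such as (1,*,*,*,*) -> 1 would make points like (1,2,2,2,5) belong to both classes.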

Evolving not just bit strings or similar but actual program code.

Structure the program in a hierarchy. e.g. The expression ( x + (y*3) ):

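As a tree: + is at the root, with children x and *, and * has children y and 3. In LISP notation: (+ x (* y 3))

Conditional expression ( if x <= y return 3 else return 4 ):

As a tree: IFLTE ("if less than or equal") is at the root, with four children x, y, 3 and 4. In LISP notation: (IFLTE x y 3 4)

We can see how complex expressions can be built up. IFLTE is basically a function that controls its own interpretation of the arguments below it: it compares the first two, then evaluates only the third or only the fourth. We can define our own functions.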
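An interpreter for such trees is short. This sketch assumes trees are nested Python tuples with the function name first, variables are strings looked up in an environment, and constants are numbers; only `+`, `*` and IFLTE are defined, as examples.

```python
import operator

# Ordinary functions evaluate all their arguments first.
FUNCTIONS = {"+": operator.add, "*": operator.mul}


def evaluate(tree, env: dict):
    """Evaluate a program tree written as nested tuples (function name first)."""
    if isinstance(tree, tuple):
        op, *args = tree
        if op == "IFLTE":
            # IFLTE controls its own arguments: compare the first two,
            # then evaluate only the third or only the fourth.
            a, b, then_branch, else_branch = args
            if evaluate(a, env) <= evaluate(b, env):
                return evaluate(then_branch, env)
            return evaluate(else_branch, env)
        return FUNCTIONS[op](*(evaluate(arg, env) for arg in args))
    if isinstance(tree, str):
        return env[tree]      # a variable such as "x" or "y"
    return tree               # a numeric constant such as 3


expr = ("+", "x", ("*", "y", 3))        # ( x + (y*3) )
cond = ("IFLTE", "x", "y", 3, 4)        # if x <= y return 3 else return 4
print(evaluate(expr, {"x": 1, "y": 2}))  # 7
print(evaluate(cond, {"x": 5, "y": 2}))  # 4
```

Because IFLTE is handled before its arguments are evaluated, a branch that is not taken is never run at all, which is what lets such a function control the interpretation of the subtrees below it.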

For expressing program flow of control as a hierarchy like this, LISP is a natural fit, and it is the most common language used. ML would probably be good too (though I am not aware of any GP in ML). Other languages are more difficult, but programs in them too (Pascal, C, even C++) have been evolved.

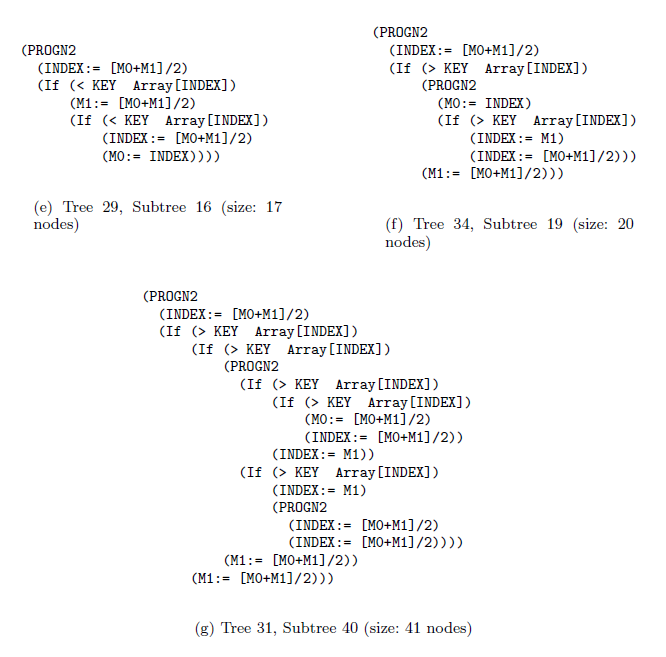

Mutation - Pick a subtree. Replace with new random subtree.

Crossover - swap subtrees. Note that, unlike the case with GAs, the 2 trees crossed over can have different topologies to each other. (The crossover point is at a different place in each.) [Note: We must allow different topologies to evolve, otherwise programs are very restricted.]
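Both operators can be sketched on trees stored as nested tuples (function name first). The function set, terminal set, the 0.3 chance of stopping early in `random_tree`, and all helper names are hypothetical choices. Each node is addressed by a path of child indices, and the two crossover points are drawn independently, so parents of different shapes can be crossed.

```python
import random

FUNCTIONS = {"+": 2, "*": 2, "IFLTE": 4}   # function name -> number of arguments
TERMINALS = ["x", "y", 1, 2, 3]


def paths(tree, path=()):
    """Yield the path (tuple of child indices) to every node, root first."""
    yield path
    if isinstance(tree, tuple):
        for i in range(1, len(tree)):
            yield from paths(tree[i], path + (i,))


def get(tree, path):
    """Return the subtree at path."""
    for i in path:
        tree = tree[i]
    return tree


def put(tree, path, new):
    """Return a copy of tree with the subtree at path replaced by new."""
    if not path:
        return new
    i = path[0]
    return tree[:i] + (put(tree[i], path[1:], new),) + tree[i + 1:]


def random_tree(rng, depth):
    """Grow a random tree no deeper than depth."""
    if depth == 0 or rng.random() < 0.3:
        return rng.choice(TERMINALS)
    op = rng.choice(list(FUNCTIONS))
    return (op,) + tuple(random_tree(rng, depth - 1) for _ in range(FUNCTIONS[op]))


def mutate(tree, rng, depth=2):
    """Pick a subtree and replace it with a new random subtree."""
    return put(tree, rng.choice(list(paths(tree))), random_tree(rng, depth))


def crossover(a, b, rng):
    """Swap a random subtree of a with a random subtree of b."""
    pa = rng.choice(list(paths(a)))
    pb = rng.choice(list(paths(b)))
    return put(a, pa, get(b, pb)), put(b, pb, get(a, pa))


def size(tree):
    return sum(1 for _ in paths(tree))


rng = random.Random(1)
a = ("+", "x", ("*", "y", 3))
b = ("IFLTE", "x", "y", 3, 4)
print(crossover(a, b, rng))
print(mutate(a, rng))
```

Crossover only moves nodes between the two trees, so the total number of nodes in the two children always equals that of the two parents, even though the individual shapes can change completely.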

One of the features of Sims' work is that he used co-evolution. Many researchers have found that if the survival problem is too hard at the start, evolution cannot work. Everything has fitness zero and so, if we pick the best to reproduce, we are just picking random individuals. What reproduces is random, and remains random. Nothing evolves.

Even at the start, we need to be able to distinguish the absolute worst from the best of the worst. But if we make survival too easy, then, as the creatures get more competent, they all rapidly attain maximum fitness and we have the same problem of distinguishing them.

One solution is that survival should be easier at the start, and get harder as the creatures get more competent. How can we ensure that the fitness function changes slowly like this? By tying it to the evolution of other creatures. As the other creatures around it improve, the survival problem gets harder, and the creature's population is subject to constant evolutionary pressure.

This has also been found in machine learning for games. If the (initially-random) machine plays a human, it simply loses massively and learns little or nothing. All moves (or all individuals) have fitness zero and are indistinguishable. If it plays itself, however (or rather, another member of the population close to itself), then the least-useless one will win, and slowly, gradually, the learning gets off the ground.
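The "everyone has fitness zero" problem, and how co-evolution avoids it, can be shown with a toy game. Assumptions, all hypothetical: each individual is just a skill number, the higher skill always wins, a fixed opponent of skill 100 stands in for the expert human, and selection keeps the top half and copies it with small mutations.

```python
import random


def beats(a: float, b: float) -> int:
    """Hypothetical game: the individual with the higher skill wins."""
    return 1 if a > b else 0


def fitness_vs_fixed(pop, opponent):
    """Everyone plays one fixed, strong opponent (e.g. an expert human)."""
    return [beats(s, opponent) for s in pop]


def fitness_vs_each_other(pop):
    """Co-evolution: everyone plays everyone else in the current population."""
    return [sum(beats(s, t) for t in pop) for s in pop]


def evolve(pop, fitness_fn, rng, generations, sigma=0.05):
    """Truncation selection: the top half breed (with mutation), the rest die."""
    pop = list(pop)
    for _ in range(generations):
        rng.shuffle(pop)   # so that ties (e.g. all-zero fitness) are broken at random
        scores = fitness_fn(pop)
        order = sorted(range(len(pop)), key=lambda i: scores[i], reverse=True)
        parents = [pop[i] for i in order[: len(pop) // 2]]
        pop = [p + rng.gauss(0, sigma) for p in parents for _ in range(2)]
    return pop


rng = random.Random(0)
start = [rng.random() for _ in range(20)]        # skills in [0, 1)
print(fitness_vs_fixed(start, 100.0))            # all zeros: nothing to select on
print(sorted(fitness_vs_each_other(start)))      # [0, 1, ..., 19]: a clear ranking
vs_human = evolve(start, lambda p: fitness_vs_fixed(p, 100.0), rng, 100)
coevolved = evolve(start, fitness_vs_each_other, rng, 100)
print(sum(start) / 20, sum(vs_human) / 20, sum(coevolved) / 20)
```

Against the fixed opponent, selection is picking at random and skill merely wanders. Against each other, the "least useless" individuals always win, and because the opponents improve too, the pressure never lets up.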

"Artificial Life" has different meanings, depending on who you talk to, just like "Artificial Intelligence".

"Artificial Intelligence" should mean the field of autonomous machines - machine search, learning, evolution, and all forms of self-modification. But what it has come to mean is often "machines doing what would be seen in a human as intelligent", i.e. a narrower focus on the rare and occasional symbolic, logical and linguistic reasoning done by the sane, adult, literate H. sapiens.

If that is the meaning of AI, then there is obviously an enormous field not covered by that name, and so this is what "Artificial Life" should cover.

Yet if you go to Artificial Life conferences, you find the field is almost entirely devoted to studying different types of evolution.

See "Battling with GA-Joe" by Stephen Grand.

Currently the terminology seems to be:

The ALife approach to evolution is more radical than the GA one. ALife researchers tend not to have an explicit fitness function solving some problem, but rather set up an environment with some dynamics, and see what emerges. There has been some success in modelling economies, ecologies, and other complex systems this way.
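The difference from a GA can be sketched with a toy world that has no fitness function at all. Everything here is a hypothetical assumption: food regrows each tick up to a cap, each agent has a heritable `efficiency` that sets how much food it can grab, agents pay a fixed living cost, die at zero energy, and split in two when their energy is high enough. Nothing in the code says "high efficiency is good"; any selection that happens emerges from the dynamics.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    efficiency: float    # heritable trait: how much food the agent can grab per step
    energy: float = 5.0


def step(agents, food, rng, regrow=50.0, capacity=200.0, cost=1.0, birth_energy=10.0):
    """One tick of a toy world: agents eat, pay a living cost,
    die at zero energy, and split in two when they have enough."""
    food = min(capacity, food + regrow)
    rng.shuffle(agents)                       # random order of access to food
    next_agents = []
    for a in agents:
        meal = min(food, 2.0 * a.efficiency)  # at most 2 units per step
        food -= meal
        a.energy += meal - cost
        if a.energy <= 0:
            continue                          # starved
        if a.energy >= birth_energy:
            a.energy /= 2                     # parent and child share the energy
            trait = min(1.0, max(0.0, a.efficiency + rng.gauss(0, 0.05)))
            next_agents.append(Agent(trait, a.energy))
        next_agents.append(a)
    return next_agents, food


rng = random.Random(0)
agents = [Agent(rng.uniform(0.3, 0.7)) for _ in range(20)]
food = 100.0
for _ in range(200):
    agents, food = step(agents, food, rng)
print(len(agents), sum(a.efficiency for a in agents) / len(agents))
```

Agents with efficiency at or below 0.5 can never gain energy, so they can never reproduce, and their lineages die out; the average efficiency drifts upwards without anyone having written a fitness function.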