Files

Contiguous file allocation

Each file is stored in one unbroken sequence of blocks on the physical disk.

This has the same problems as contiguous memory allocation.

What happens if a file grows?

It has to be rewritten into a larger free slot (unless the space just after it happens to be free).

This takes ages.
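A minimal bash sketch of why growth is expensive under contiguous allocation. The 10-slot disk, the file names and the helpers first_fit and grow are all hypothetical; a real file system manages disk blocks, not a bash array. The toy frees the file, then re-places it with a first-fit search, so it only stays put if that search lands on the same start.

```shell
#!/usr/bin/env bash
# Toy model of contiguous allocation on a hypothetical 10-slot disk.
# Each slot holds the name of the file using it, or "." if it is free.
disk=(A A A B B . . . . .)

# first_fit N: print the start of the first run of N free slots, or -1.
first_fit() {
    local need=$1 run=0 i
    for (( i = 0; i < ${#disk[@]}; i++ )); do
        if [[ ${disk[i]} == . ]]; then
            (( run++ ))
            if (( run == need )); then
                echo $(( i - need + 1 ))
                return 0
            fi
        else
            run=0
        fi
    done
    echo -1
}

# grow NAME NEWLEN: a contiguous file must sit in one unbroken run, so this
# toy frees the file's slots and re-places it with first fit. If the start
# changes, every old block has to be copied (rewritten) to the new place.
grow() {
    local name=$1 newlen=$2 old=() start i
    # Free the file's current slots, remembering where they were.
    for (( i = 0; i < ${#disk[@]}; i++ )); do
        if [[ ${disk[i]} == "$name" ]]; then
            old+=("$i")
            disk[i]=.
        fi
    done
    start=$(first_fit "$newlen")
    if (( start < 0 )); then
        # No hole is big enough: put the file back where it was.
        for i in "${old[@]}"; do disk[i]=$name; done
        echo "no room to grow $name to $newlen blocks"
        return 1
    fi
    for (( i = start; i < start + newlen; i++ )); do disk[i]=$name; done
    if (( start == old[0] )); then
        echo "$name extended in place"
    else
        echo "$name moved: ${#old[@]} blocks rewritten, now starts at $start"
    fi
}

grow A 4            # A moved: 3 blocks rewritten, now starts at 5
echo "${disk[*]}"   # . . . B B A A A A .
```

After the move, the 3 slots A left behind form a hole too small for another 4-block file: the same external fragmentation seen with contiguous memory allocation.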

- Many files grow slowly, e.g. text editor files.

- But some files grow quickly, unpredictably, or without limit, e.g. an HTTP access log, or a zip or tar archive file:

tar -cf file.tar $HOME

This can grow from 0 K to 10 G in a minute or two.

As with paging in memory, the disk is divided into blocks.

- Blocks are of fixed size, say 1 K or 4 K.

- On Linux there are ways to query the block size of different devices at the command line.

- On DCU Linux, you may not have permission to do this. So here is a quick alternative:

Create a new file. It will get one block allocated to it:

$ echo 1 > newfile

See how much space is allocated to it (one block):

$ du -h newfile

4.0K    newfile
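Another no-root option is to ask stat for the numbers directly. A minimal bash sketch, assuming GNU coreutils stat (standard on Linux; BSD/macOS stat takes different options). The helper names are hypothetical. du bases its figure on the same allocated-block count, so the "allocated" line should agree with du.

```shell
#!/usr/bin/env bash
# Compare a file's data size with the space allocated to it, without root.
# Assumes GNU coreutils stat.

# apparent_size FILE: bytes of data, as shown by ls -l
apparent_size() { stat -c %s -- "$1"; }

# allocated_bytes FILE: %b = blocks allocated, %B = bytes per such block
allocated_bytes() {
    local blocks unit
    read -r blocks unit < <(stat -c '%b %B' -- "$1")
    echo $(( blocks * unit ))
}

# fs_block_size PATH: fundamental block size of the filesystem holding PATH
fs_block_size() { stat -f -c %S -- "$1"; }

f=$(mktemp)           # scratch file, removed below
echo 1 > "$f"         # 2 bytes: "1" plus a newline
echo "data:       $(apparent_size "$f") bytes"
echo "allocated:  $(allocated_bytes "$f") bytes"
echo "block size: $(fs_block_size "$f") bytes"
rm -f -- "$f"
```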

A file is held in a collection of blocks scattered over the disk.

If the file needs more blocks, it can take them from anywhere on the disk where blocks are free.
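A minimal bash sketch of taking free blocks "from anywhere". The 10-block disk and the helper alloc are hypothetical; "." marks a free block. The list of block numbers it prints is the kind of index the file system must then keep for the file.

```shell
#!/usr/bin/env bash
# Toy model of non-contiguous allocation on a hypothetical 10-block disk.
# "." marks a free block; letters mark blocks owned by files.
disk=(A . B . . B . A . .)

# alloc NAME N: give file NAME any N free blocks, wherever they are,
# and print the block numbers it received.
alloc() {
    local name=$1 need=$2 got=() i
    # Scan for free blocks until we have enough; they need not be adjacent.
    for (( i = 0; i < ${#disk[@]} && ${#got[@]} < need; i++ )); do
        if [[ ${disk[i]} == . ]]; then
            got+=("$i")
        fi
    done
    if (( ${#got[@]} < need )); then
        echo "disk full"
        return 1
    fi
    for i in "${got[@]}"; do disk[i]=$name; done
    echo "${got[*]}"
}

alloc C 4           # 1 3 4 6
echo "${disk[*]}"   # A C B C C B C A . .
```

No hole needs to be 4 blocks long: a file can grow as long as any free blocks remain.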

Index of where the blocks of the file are

Like pages in memory, blocks can "flow" like liquid into free slots around the disk.

They don't all need to be in the same place.

But we need some index of where the pieces of the file are.

There are various indexing systems, using linked lists or tables:

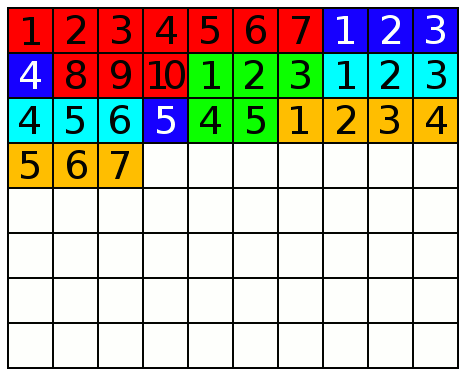

Demonstration of fragmentation (files split into multiple parts). From below.
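One way to combine linked lists and tables is to keep every file's "next block" pointers in a single table, as the FAT file system does. A minimal bash sketch under that assumption; the 8-block disk, the file names and the helper blocks_of are hypothetical, and it needs bash 4+ for the associative array.

```shell
#!/usr/bin/env bash
# Linked-list allocation kept in a table, in the style of FAT.
# Hypothetical 8-block disk: next[b] is the block that follows block b in
# the same file; -1 marks the last block of a file. Needs bash 4+.
next=(-1 5 -1 -1 7 2 -1 -1)

# The directory records only where each file's chain starts.
declare -A first=([report.txt]=1 [notes.txt]=4)

# blocks_of NAME: walk the chain from the file's first block.
blocks_of() {
    local b=${first[$1]-} out=()
    [[ -n $b ]] || return 1          # no such file
    while (( b != -1 )); do
        out+=("$b")
        b=${next[b]}
    done
    echo "${out[*]}"
}

blocks_of report.txt   # 1 5 2
blocks_of notes.txt    # 4 7
```

Reaching the last block of a file means following every link before it. An index-table scheme (such as Unix inodes) instead stores the list of block numbers, here 1 5 2, directly for each file.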

Shell script to see blocks allocated to files

# compare actual file size with blocks used
# (quoting "$i" keeps file names containing spaces intact)
for i in *
do
    ls -ld -- "$i"
    du -h -- "$i"
done


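Results look like this (on a system with 1 K blocks):

-rwxr-xr-x 1 me mygroup 857 Jun 27 2000 synch
1.0K synch
-rwxr--r-- 1 me mygroup 1202 Oct 25 2013 profile
2.0K profile
-rwxr-xr-x 1 me mygroup 1636 Oct 28 2009 yo.java
2.0K yo.java
-rwxr--r-- 1 me mygroup 2089 Oct 8 00:03 flashsince
3.0K flashsince
-rwxr-xr-x 1 me mygroup 9308 Oct 19 2010 yo.safe
10K yo.safe

Each file uses a whole number of blocks: e.g. flashsince holds 2089 bytes, so it needs three 1 K blocks (3072 bytes), and the remaining 983 bytes of its last block are wasted.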
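The loop above prints two lines per file. A minimal bash variant that prints one line per file with the data size, the allocated space and their difference (the slack), all in bytes. It assumes GNU coreutils stat; the function name slack_report is hypothetical.

```shell
#!/usr/bin/env bash
# One line per regular file in the current directory:
# data size, allocated space and slack (allocated minus size), in bytes.
# Assumes GNU coreutils stat.
slack_report() {
    local f size blocks unit alloc
    printf '%10s %10s %10s  %s\n' size allocated slack name
    for f in *; do
        [[ -f $f ]] || continue              # regular files only
        read -r size blocks unit < <(stat -c '%s %b %B' -- "$f")
        alloc=$(( blocks * unit ))
        printf '%10d %10d %10d  %s\n' "$size" "$alloc" "$(( alloc - size ))" "$f"
    done
}

# usage: cd into a directory, then run slack_report
```

A sparse file can show negative slack, because it has less space allocated than its apparent size.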


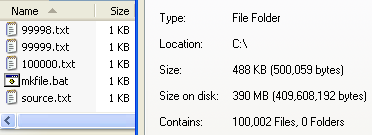

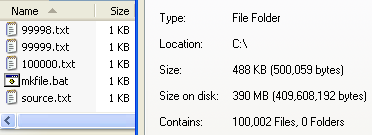

An extreme experiment to demonstrate wasted space ("slack space") in file systems.

This person makes 100,000 files of 5 bytes each.

This is only 500 K of actual data.

But it needs 400 M of disk space to store it, because each file occupies at least one whole block (with 4 K blocks, 100,000 × 4 K ≈ 400 M).

From here.
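The arithmetic behind the experiment, as a minimal bash sketch. It assumes every non-empty file is rounded up to a whole number of fixed-size blocks and ignores metadata such as inodes and directory entries; the 4096-byte block size and the function name space_needed are assumptions.

```shell
#!/usr/bin/env bash
# space_needed COUNT SIZE BLOCK: bytes allocated for COUNT files of SIZE
# bytes each, on a filesystem with BLOCK-byte blocks. Each file's size is
# rounded up to a whole number of blocks (metadata is ignored).
space_needed() {
    local count=$1 size=$2 block=$3
    local per_file=$(( (size + block - 1) / block * block ))
    echo $(( count * per_file ))
}

data=$(( 100000 * 5 ))
used=$(space_needed 100000 5 4096)
echo "data: $data bytes, allocated: $used bytes"
# data: 500000 bytes, allocated: 409600000 bytes
```

The same formula gives the flashsince figure from the shell script results: space_needed 1 2089 1024 prints 3072.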

Contiguous file allocation is good where possible

Unlike in memory, where contiguous allocation is dead, in files it has made a comeback.

The reason is that disk space is plentiful and cheap, but the speed of reading and writing disks has not increased as much; indeed it has got worse relative to CPU speed.

To write a contiguous file to disk, you don't have to jump the disk head around the disk.

You just keep writing after the last location you wrote: the head is already in the correct position.

So modern systems try to use contiguous allocation for small files, and only use non-contiguous allocation for large files.

To achieve this, they may allocate more space than is strictly needed.

Some wasted disk space is worth it if it makes disk I/O faster.
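On Linux a program can reserve space before writing it, with the util-linux fallocate command (or the fallocate system call underneath). The file system can then try to give the file one contiguous run of blocks, although nothing guarantees that. A minimal bash sketch contrasting it with truncate, which only sets the size and leaves a sparse file with (almost) nothing allocated. It assumes GNU coreutils and util-linux; the helper alloc_bytes is hypothetical.

```shell
#!/usr/bin/env bash
# Reserving disk space in advance (fallocate) versus only setting the
# size (truncate, which leaves a sparse file). Linux only.
# Assumes GNU coreutils (stat, truncate) and util-linux (fallocate).

# alloc_bytes FILE: bytes actually allocated on disk
alloc_bytes() {
    local blocks unit
    read -r blocks unit < <(stat -c '%b %B' -- "$1")
    echo $(( blocks * unit ))
}

dir=$(mktemp -d)
truncate -s 1M "$dir/sparse"
if fallocate -l 1M "$dir/reserved" 2>/dev/null; then
    echo "reserved: size $(stat -c %s "$dir/reserved"), allocated $(alloc_bytes "$dir/reserved")"
else
    echo "this filesystem does not support fallocate"
fi
echo "sparse:   size $(stat -c %s "$dir/sparse"), allocated $(alloc_bytes "$dir/sparse")"
rm -rf -- "$dir"
```

Both files report a size of 1048576 bytes; only the reserved one has that space set aside on disk.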

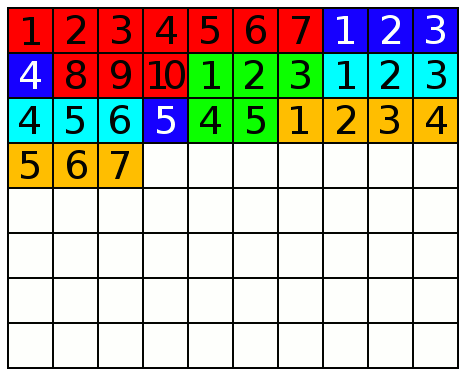

Demonstration of fragmentation (files split into multiple parts) and defragmentation (reducing such splitting and making files contiguous). From here.

- Defragmentation is for HDD, not SSD:
  - Defragmentation can speed up a hard drive (with a moving disk head).
  - It is not needed and not useful on a solid state drive (no moving parts).

- The user probably does not have to think about defragmentation:
  - Windows now automatically runs defragmentation in the background.
  - Linux file systems are designed to avoid fragmentation as much as possible by allocating files far apart on the disk, so that each has room to grow contiguously.

Also, to speed things up:

the OS caches recently accessed blocks in memory in case they are needed again.

This avoids another disk I/O.
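A minimal bash sketch of a block cache, assuming a plain least-recently-used (LRU) eviction policy and a hypothetical 3-block capacity. Real kernels use more refined approximations of LRU; this toy only shows why repeated reads of the same block avoid disk I/O. The name read_block is hypothetical.

```shell
#!/usr/bin/env bash
# Toy block cache with LRU eviction. A "miss" stands for a real disk read.
CACHE_SIZE=3
cache=()            # cached block numbers, most recently used last
hits=0
misses=0

# read_block B: look B up in the cache, loading it on a miss.
read_block() {
    local b=$1 i found=-1
    for i in "${!cache[@]}"; do
        if (( cache[i] == b )); then found=$i; break; fi
    done
    if (( found >= 0 )); then
        (( hits++ ))
        unset 'cache[found]'              # re-appended below as most recent
    else
        (( misses++ ))                    # would be a disk I/O
        if (( ${#cache[@]} == CACHE_SIZE )); then
            cache=("${cache[@]:1}")       # evict the least recently used
        fi
    fi
    cache=("${cache[@]}" "$b")            # re-pack the array and append
}

for b in 1 2 1 3 4 1 2; do read_block "$b"; done
echo "hits=$hits misses=$misses"          # hits=2 misses=5
echo "cached: ${cache[*]}"                # cached: 4 1 2
```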

RAM drive

- RAM drive: RAM formatted with a file system.

- Very fast, but volatile.
  - It will be cleared after a reboot.
  - It needs to be loaded up with files from disk (non-volatile).
  - Changes to files need to be written back to the actual disk, or they will be lost.
  - Automatic software can take care of the above two.

- Battery-backed RAM drive: survives a power outage.
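A minimal bash sketch of the load and write-back steps. It assumes /dev/shm is a RAM-backed tmpfs, which is true on most Linux systems; mounting a dedicated RAM drive would need root, e.g. sudo mount -t tmpfs -o size=512M tmpfs /mnt/ramdisk. The helper names ram_load and ram_save are hypothetical, and cp -a is GNU cp.

```shell
#!/usr/bin/env bash
# Load files into a RAM drive, work on them there, then write them back.
# Assumes GNU cp; /dev/shm is usually a tmpfs (RAM-backed) on Linux.

# ram_load DISKDIR RAMDIR: copy everything (including dotfiles) into RAM.
ram_load() { mkdir -p -- "$2" && cp -a -- "$1"/. "$2"/; }

# ram_save RAMDIR DISKDIR: write changes back to non-volatile disk,
# otherwise they are lost at reboot. Files deleted in RAM are NOT
# deleted on disk by this simple copy.
ram_save() { cp -a -- "$1"/. "$2"/; }

# Check what kind of filesystem /dev/shm is (prints e.g. "tmpfs").
stat -f -c '/dev/shm is on a %T filesystem' /dev/shm 2>/dev/null
```

"Automatic software" for this would typically run ram_load at boot and ram_save periodically and at shutdown; a tool such as rsync with --delete would also propagate deletions.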