Memory

Basic idea is that there is such a thing as a "bad" memory access.

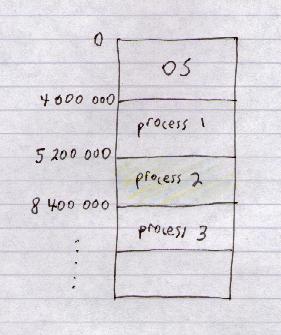

A user process runs in a different memory space from the OS process (and from other processes).

On a multi-process system (whether single-user or multi-user) each process has its own memory space in which (read-only) code and (read-write) data live.

i.e. Each process has defined limits to its memory space:

Q. Even if there are no malicious people, process memory areas need to be protected from each other - Why?

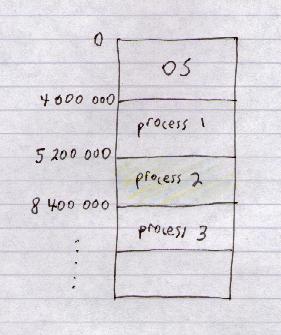

Above, each process has two registers - a base register and a limit register.

e.g. The base register for process 2 has contents 5200000. Its limit register has contents 3200000.

And then every memory reference is checked against these limits. A simple model would look like this:

    When reference is made to memory location x:
        if x resolves to between base and (base+limit)
            return pointer to memory location in RAM
        else
            OS error interrupt
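The simple model can be sketched in a few lines of Python. This is only an illustration: in a real machine the check is done by hardware on every reference. The exception name `MemoryProtectionError` and the function `check_access` are invented here, and treating the upper bound as exclusive (legal addresses run from base up to base+limit-1) is an assumption.

```python
class MemoryProtectionError(Exception):
    """Stands in for the OS error interrupt (hypothetical name)."""


def check_access(x, base, limit):
    """Return x if it lies in [base, base + limit), otherwise raise."""
    if base <= x < base + limit:
        return x  # legal: the reference goes through to RAM
    raise MemoryProtectionError(f"illegal access to location {x}")


# Process 2 from the text: base 5200000, limit 3200000.
BASE, LIMIT = 5200000, 3200000

print(check_access(5200000, BASE, LIMIT))  # first legal location
print(check_access(8399999, BASE, LIMIT))  # last legal location
try:
    check_access(8400000, BASE, LIMIT)     # one past the end
except MemoryProtectionError as err:
    print("trap:", err)
```

Note that the process itself never gets to change `BASE` or `LIMIT` here, which is the point of the question that follows.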

Q. Loading values into the base or limit registers is a privileged instruction. Why?

As we shall see, memory protection has in fact evolved far beyond this simple model.

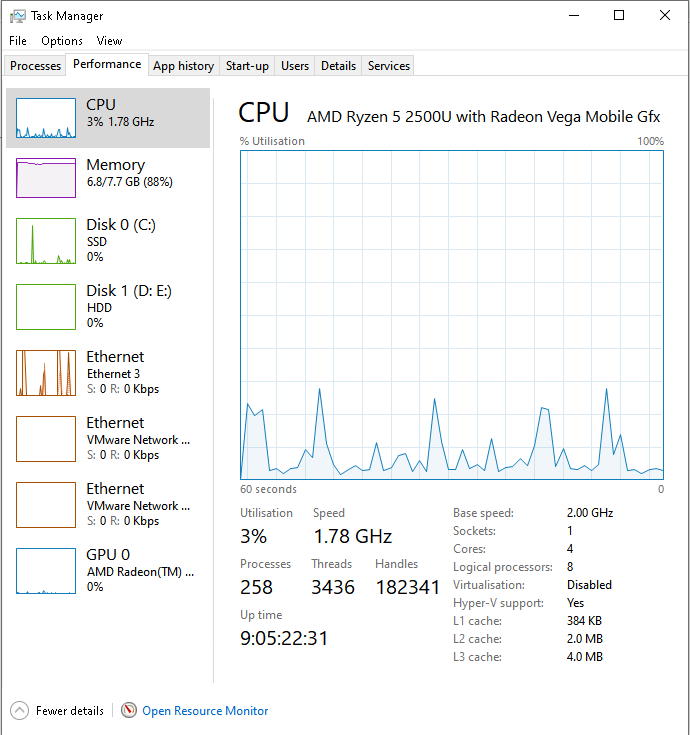

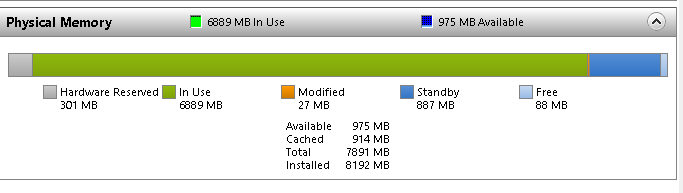

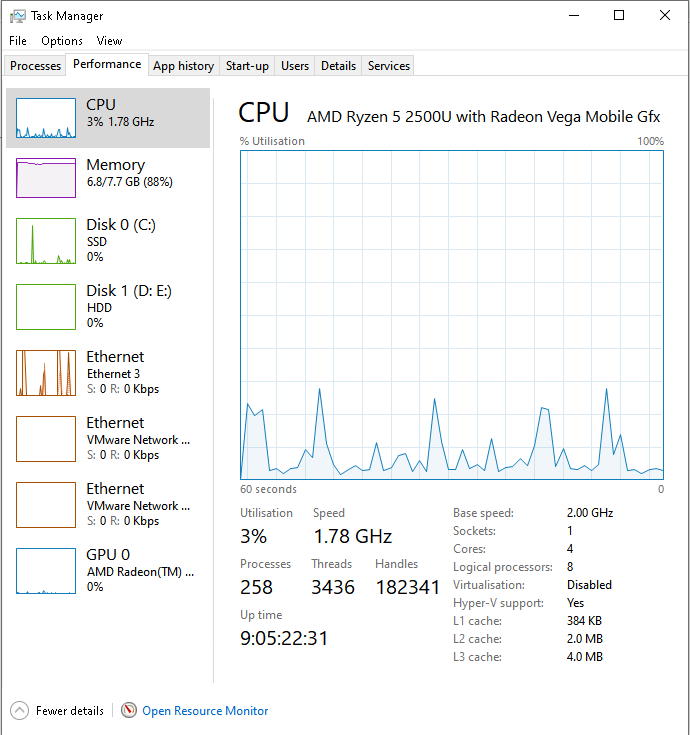

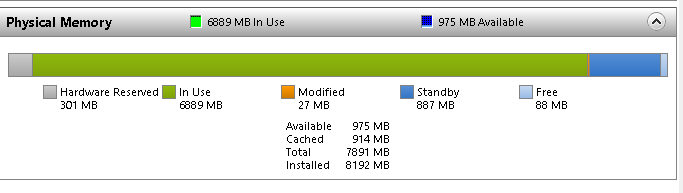

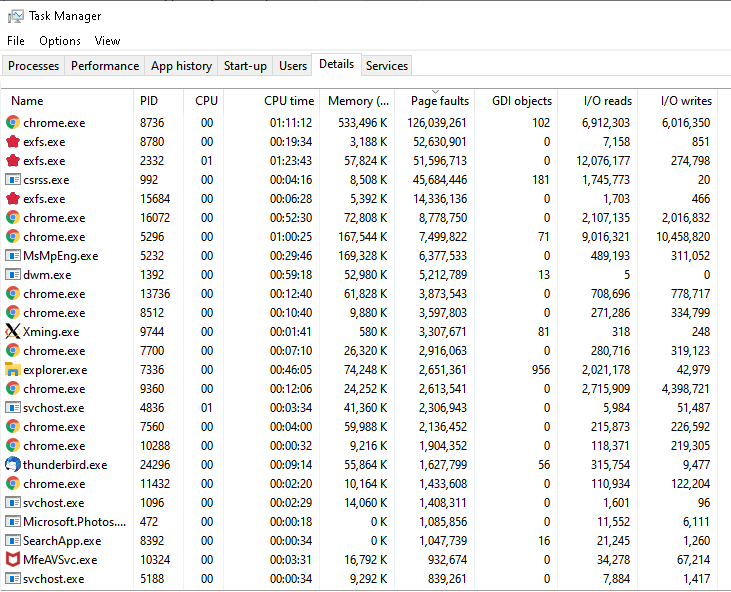

Looking at memory use on Windows. Here Windows 10 - Task Manager - Performance tab.

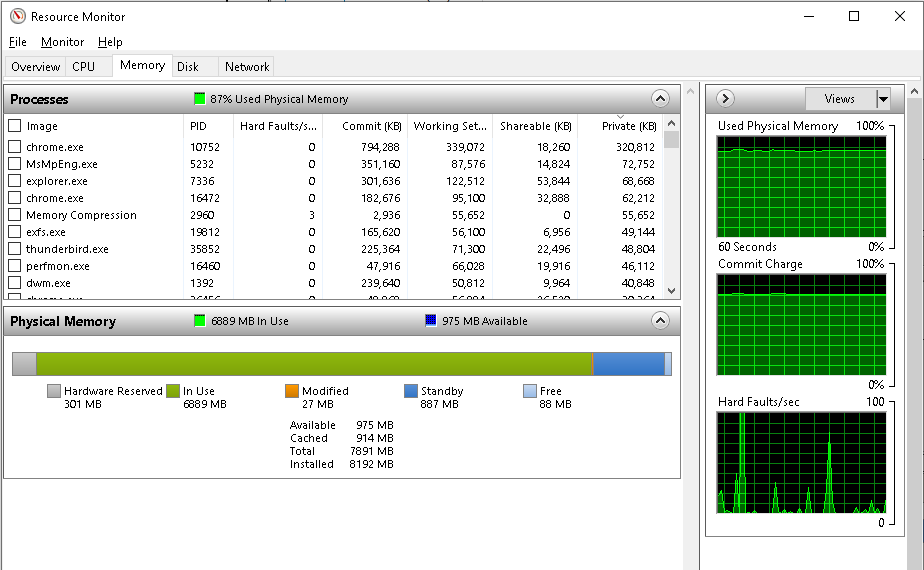

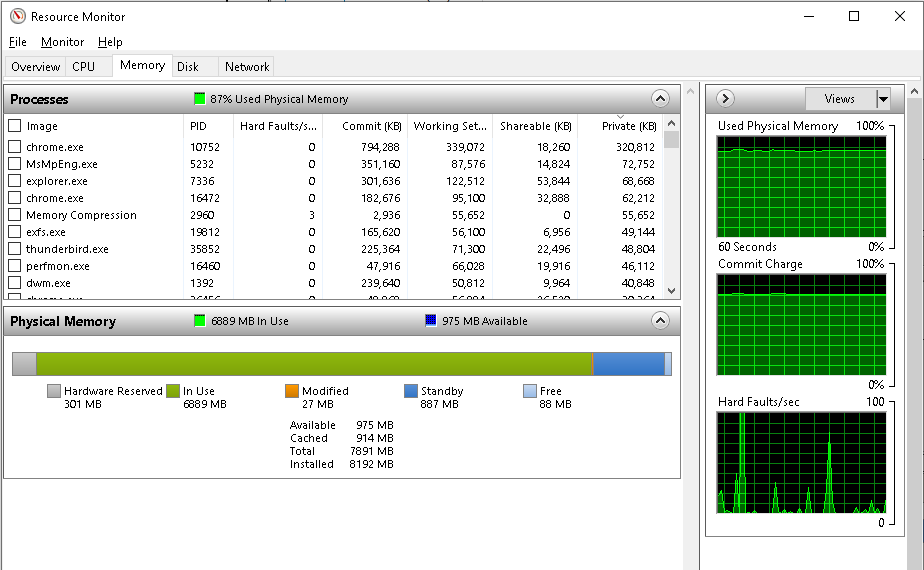

Click "Resource Monitor" in the above and you get further breakdowns. See "Memory" tab. Sort by column. Hover over column names to see definitions.

- "Standby" is really free RAM. See explanation later.

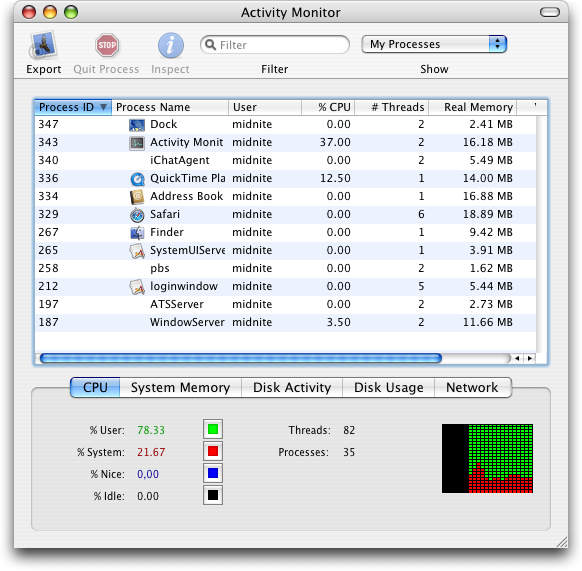

- See Processes (ps) in Linux/Unix

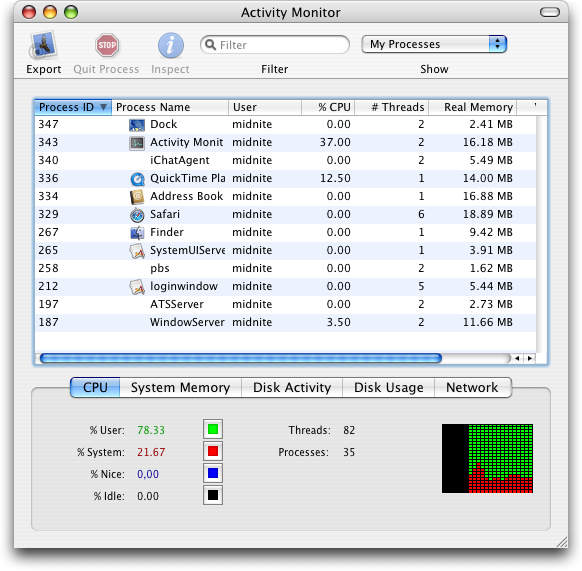

Apple OS X has Activity Monitor. OS X is in the Unix family. You can also use ps on the Apple Terminal (command-line).

Image from here. Creative Commons.

The transformations made to the program, from the code written by a human to the instructions executed by the CPU:

- Compiled program (e.g. typical use of C++, Java):
  - Program typically written "in factory" in a high-level language (HLL), with comments, English-like syntax and variable names, macros and other aids to the programmer.
  - Program is then compiled "in factory" into an efficient, machine-readable version, with all of the above stripped, optimisations made, and everything at "compile-time" translated and resolved as much as possible, so as little as possible needs to be done when it is run.
  - At different times, on different machines, and with different other programs already running, the user will "launch" or "run" a copy of this compiled program. Any further translation that could not be done at compile-time will now be done at this "load-time". Then the algorithm itself actually starts to run.
  - Any change that has to be done while the algorithm has already started to run is a change at "run-time".
- Interpreted program (e.g. typical use of Javascript, Shell):
  - No compilation step. Source code itself is read at "load-time".

Memory mapping (or binding)

Consider what happens with the memory allocation for a program's global variables.

The programmer defines a global variable "x" and refers to "x" throughout the high-level language code:

    do 10 times
        print(x)
        x := x+7

Clearly when the program is running, some memory location will be set aside for x and the actual machine instructions will be:

    do 10 times
        read contents of memory location 7604 and print them
        read contents of loc 7604, add 7, store result back in loc 7604

How "x" maps to "7604" can happen in many ways:

- Source code: The programmer maps x to 7604 in the source code.
- Compile-time: If x is mapped to 7604 at this point (or before) then the program can only run in a certain memory location. (e.g. DOS - single user, single process)
- Load-time: The compiler might map x to "start of program + 604". Then when the program is launched, the OS examines memory, decides to run the program in a space starting at location 7000, resolves all the addresses to absolute numerical addresses (so x becomes 7604), and starts running.
- Run-time: Even after the program has started, we may change the mapping (move the program, or even just bits of it, in memory), so that next time round the loop it is:

      read contents of memory location 510 and print them
      read contents of loc 510, add 7, store result back in loc 510

Obviously, the OS has to manage this! The user can't. The programmer can't. Even the compiler designer can't.
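Load-time binding can be sketched as follows. This is a toy model, not how any real loader is written: the operation names and the function `bind` are invented for illustration. The compiler output holds only offsets from the start of the program, and the absolute addresses appear when a load address is chosen.

```python
# Compiler output: addresses are offsets from the start of the program.
# The operation names are made up for this illustration.
relocatable_code = [
    ("print_contents_of", 604),
    ("add_7_to", 604),
]


def bind(code, load_address):
    """Resolve every program-relative offset to an absolute address."""
    return [(op, load_address + offset) for op, offset in code]


# Load-time binding: the OS decides to run the program at location 7000.
print(bind(relocatable_code, 7000))
# [('print_contents_of', 7604), ('add_7_to', 7604)]

# The same compiled program launched at a different (arbitrary) location.
print(bind(relocatable_code, 12000))
# [('print_contents_of', 12604), ('add_7_to', 12604)]
```

The compiled program is never changed. Only the load address differs between launches, which is why the same binary can run wherever the OS finds room.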

Questions

Question - Why is it not practical for the OS to say to a user on a single-user system: "I'm sorry, another program is occupying memory space 7000-8000. Please terminate the other program before running your program"

Question - Why is it not practical for the OS to say to a user on a multi-user system: "I'm sorry, another program is occupying memory space 7000-8000. Please wait until the other program terminates before running your program"

Question - Why can't program writers simply agree on how to divide up the space? Some programs will take memory locations 7000-8000. Other programs will take locations 8000-9000, etc.

Question - The user's program has started. The OS needs to change what memory locations things map to, but binding is done at load-time. Why is it not practical for the OS to say to the user: "Please reload your program so I can re-do the binding"

Question - Why is it not practical to say to a user: "Please recompile your program"

Partial load of programs (desired)

Consider: Does the whole program have to be loaded into memory when it is run?

Many applications contain vast amounts of functionality that is rarely or never used. e.g. Run a web browser for years. Never once click on some menu options. But was the code behind those menu options loaded into memory every single time, for years?

How can we load only part of a program?


Pre-load programs and keep old ones in memory (desired)

In apparent contrast to only loading part of a program into RAM before starting execution is the following idea, where we pre-load things (and keep old things) in memory that we may not even need.

Above we saw a typical memory map of a Windows 10 system. We saw there is not much free memory, but I said that "Standby" is really free memory. I will now explain this.

- In the "Standby" section, the OS has filled RAM with stuff we may not even need:
  - It keeps old code and data that may be needed again.
  - It may pre-load commonly used programs into memory in case they are run.
  - See Windows Prefetch and SuperFetch algorithms.
  - Info on recently-run programs is stored in .PF files in something like C:\Windows\Prefetch
- This is a good idea. First, this RAM is actually still free for use if needed. It is not being reserved. When more RAM is needed, it can be taken from here.
- Second, pre-loads can be done when the system is not busy and need not detract from the user experience. Keeping old data in RAM of course costs nothing.
- Third, if the old data is needed again, or if those common programs are run, they are already in RAM and will run straight away without reading from disk. If they are not needed, there is no problem.
- Why fill RAM with stuff we may not need? For speed. This is the "unused RAM is wasted RAM" idea.

Memory management

Processes are large. They take up a lot of memory. Imagine if this has to be contiguous - all in one unbroken chunk from memory location A to location A+n. We end up with a memory map like this:

| Start address | End address | Process |
|---|---|---|
| 0 | 1000 | OS |
| 1000 | 1200 | free |
| 1200 | 2000 | Process 11 |
| 2000 | 2100 | Process 12 |
| 2100 | 2400 | Process 13 |
| 2400 | 3300 | free |
| 3300 | 3800 | Process 3 |
| 3800 | 4500 | free |

Problems with contiguous memory spaces:

- Programs are starting and ending all the time. They end up all over memory, with gaps everywhere.
- When loading a program, we have to find a contiguous space big enough to hold it.
- "Holes" develop in memory. Lots of memory is free, but it is not contiguous, so it can't be used.
- When a process asks for more memory at run-time, there might not be any free above it. (See the memory map above.)
- How do we load only part of a process as opposed to the whole thing? How do we swap out only part of a process as opposed to the whole thing? We need a mechanism for identifying parts of a process.
- If you load only part of the program at launch, you still must reserve all possible space ever needed above it.
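The "holes" problem can be seen with the free regions from the memory map above. The sketch below uses a first-fit search, which is just one simple strategy picked for illustration (the notes do not say which strategy an OS would use), and the name `first_fit` is invented here.

```python
# Free regions from the memory map above, as (start, end) pairs.
holes = [(1000, 1200), (2400, 3300), (3800, 4500)]


def first_fit(holes, size):
    """Return the start of the first hole that can hold `size`, else None."""
    for start, end in holes:
        if end - start >= size:
            return start
    return None


total_free = sum(end - start for start, end in holes)
print(total_free)              # 1800 units are free in total
print(first_fit(holes, 600))   # 2400: fits in the second hole
print(first_fit(holes, 1000))  # None: no single hole is big enough
```

So a program needing 1000 units cannot be loaded, even though 1800 units are free.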

The modern OS does Paging. Non-contiguous memory spaces. Program broken (automatically by the OS) into lots of small chunks that flow like water into every crack and gap in memory, and out to disk (swapping) and back in.

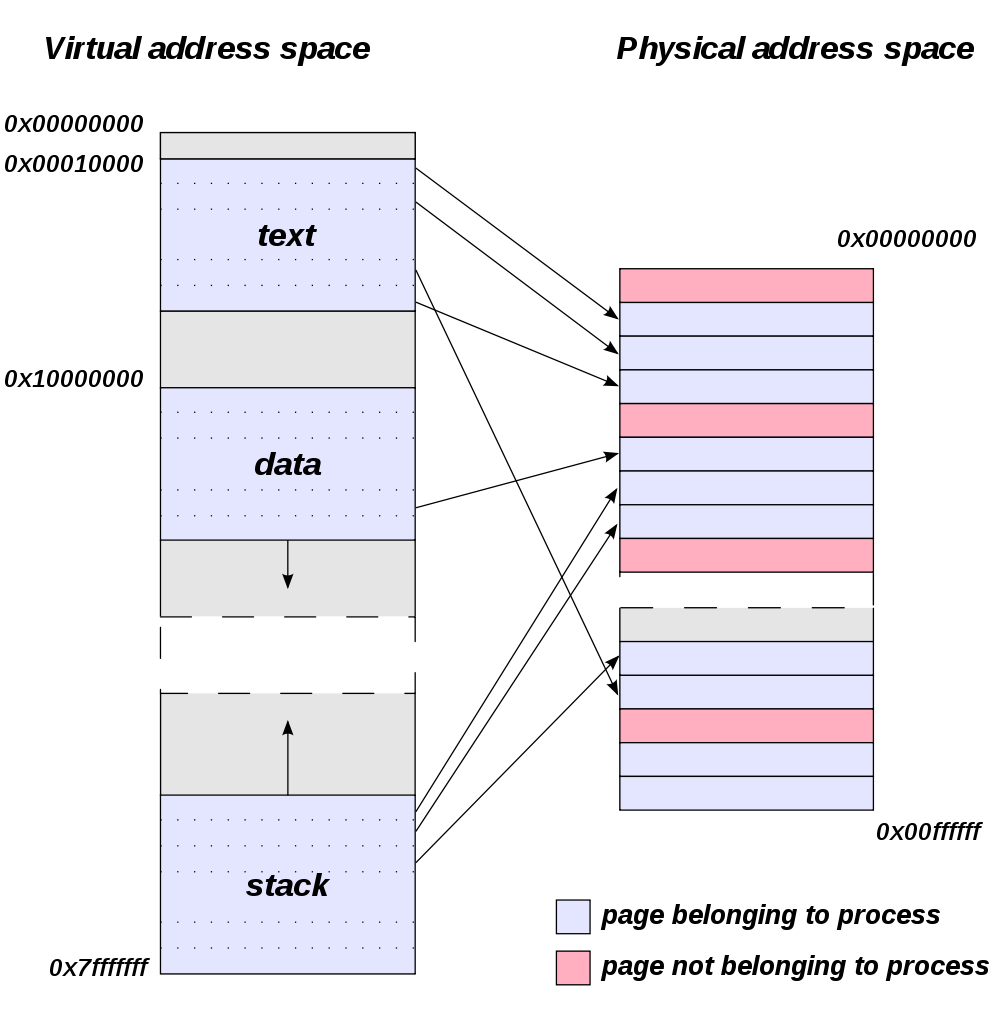

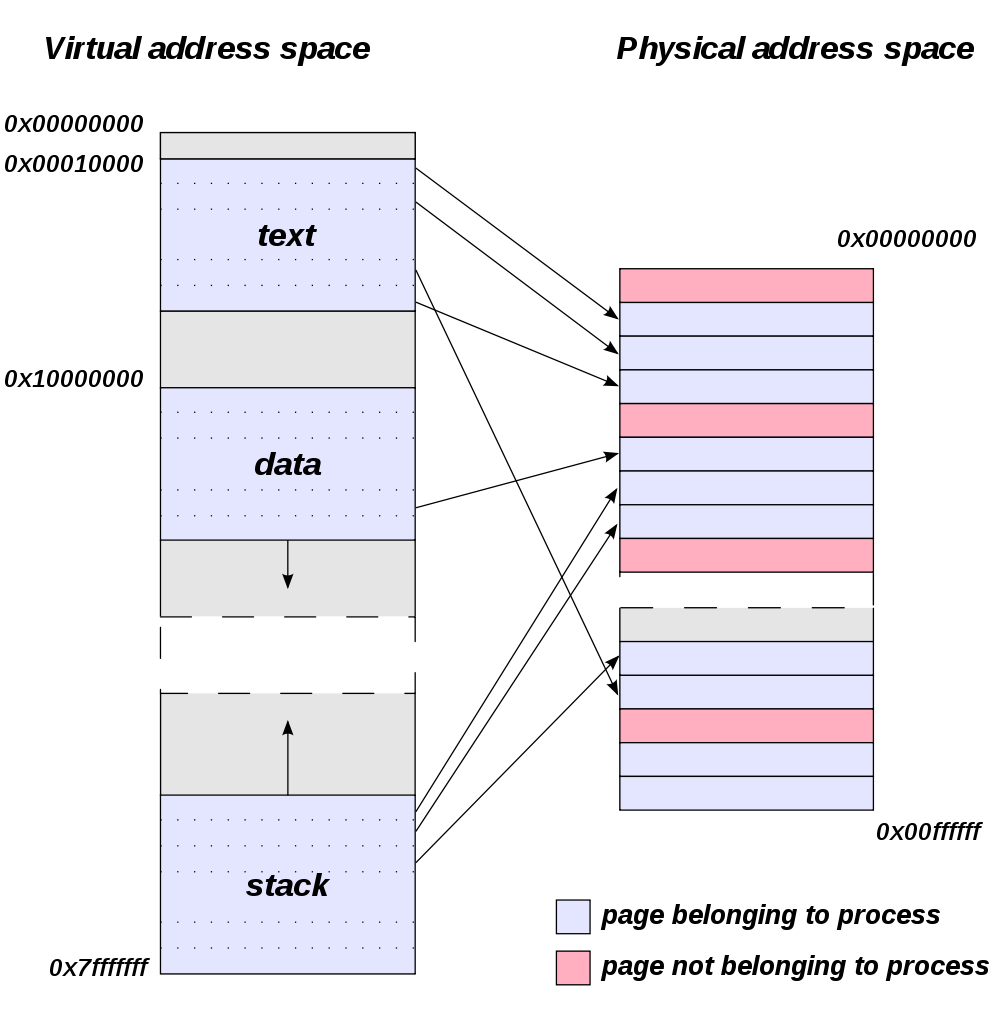

Memory mapping with paging

- A program's logical (or virtual) memory space is divided into pages.
  - Pages are of fixed size, say 4 K.
  - On Linux you can query the page size at the command line with getconf:

        $ getconf PAGESIZE
        4096

  - Some architectures support multiple page sizes (pages, large pages, huge pages).
- A program references a memory location in its virtual address space as an offset within a particular page.
- Physical memory is divided into frames, of the same size as pages, so that pages can be loaded into the frames.
- When the program is loaded into memory, each page is loaded into a frame. Crucially, the frame can be anywhere in memory. Any free frame will do.
- The page table shows where the program is. Paging means the program can be physically located all over memory.
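To make "an offset within a particular page" concrete, here is a small sketch that splits a virtual address into a page number and an offset. It assumes the 4 K page size used as the example above, and the function name `split_address` is invented for illustration. The last line prints the page size Python reports for the machine running the script, which may or may not be 4096.

```python
import mmap

PAGE_SIZE = 4096  # the 4 K example page size from the text


def split_address(virtual_address, page_size=PAGE_SIZE):
    """Return (page number, offset within page) for a virtual address."""
    return divmod(virtual_address, page_size)


print(split_address(0))      # (0, 0)
print(split_address(4095))   # (0, 4095): last byte of page 0
print(split_address(4096))   # (1, 0): first byte of page 1
print(split_address(10000))  # (2, 1808)

# Page size on the machine running this script.
print(mmap.PAGESIZE)
```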


Example

e.g. Program has logical memory space: page 0, .., page 3. Physical memory: frame 0, .., frame 7.

Page table:

    page -> frame
    0 -> 1
    1 -> 4
    2 -> 3
    3 -> 7

The frames don't have to be contiguous, and don't even have to be in order.

Page 0, offset 2, maps to: Frame 1, offset 2.
Page 3, offset n, maps to: Frame 7, offset n.
etc.
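The example page table can be written out as a lookup. This is a sketch only: real translation is done by hardware, the names `translate` and `physical_address` are invented here, and the 4096-byte page size is an assumption, since the example does not fix one.

```python
PAGE_SIZE = 4096  # assumed; the example above does not fix a page size

# Page table from the example: page number -> frame number.
page_table = {0: 1, 1: 4, 2: 3, 3: 7}


def translate(page, offset):
    """Map (page, offset) in logical memory to (frame, offset) in physical memory."""
    if not 0 <= offset < PAGE_SIZE:
        raise ValueError("offset lies outside the page")
    frame = page_table[page]  # KeyError: the process has no such page
    return frame, offset


def physical_address(page, offset):
    """Flat physical address, counting frames from location 0."""
    frame, offset = translate(page, offset)
    return frame * PAGE_SIZE + offset


print(translate(0, 2))         # (1, 2)
print(translate(3, 100))       # (7, 100)
print(physical_address(0, 2))  # 4098
```

The offset passes through unchanged. Only the page number is swapped for a frame number.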

Paging advantages

Paging advantages:

- Holes and fragmented memory are no problem.
- When loading a program, we do not need to search for contiguous space, only for enough memory in different locations.
- If a bit of memory is available anywhere, the program can use it.
- Can easily ask for more memory, in any location.
- Can easily load only parts of a program.
- Can easily swap only parts of a process to disk.
- Makes maximal use of memory. Indeed, we would find modern machines almost unusable without paging.
- Separation of process memory from other processes. A process cannot address memory it doesn't own. It has no language to even describe such memory.

Paging disadvantages:

- Overhead - have to resolve addresses at run-time.

Swapping pages out to disk:

The program addresses a logical space. This logical space maps to pages scattered all over memory and on disk. Much of the program can be left on disk if not needed. The OS hides this from user and programmer.

Only need: Active processes in memory. In fact, only need: Active bits of active processes in memory. The OS decides what bits of programs stay in memory at any given time.

If a page is not in memory it is not an error; the OS just fetches it. This is called a page fault.
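A toy model of this behaviour is sketched below. All the names (`resident`, `on_disk`, `free_frames`, `access`) and the frame numbers are invented for illustration, and the sketch leaves out what happens when there is no free frame left (some page would have to be swapped out first).

```python
resident = {0: 1, 1: 4}  # pages currently in RAM: page -> frame
on_disk = {2, 3}         # pages of the program still on disk
free_frames = [3, 7]     # frames available for loading
page_faults = 0


def access(page):
    """Return the frame holding `page`, fetching it from disk if needed."""
    global page_faults
    if page not in resident:
        if page not in on_disk:
            raise KeyError(f"page {page} does not belong to this program")
        page_faults += 1                     # page fault: not an error
        resident[page] = free_frames.pop(0)  # "read" the page into a free frame
        on_disk.discard(page)
    return resident[page]


for page in [0, 2, 2, 3, 1]:
    print(page, "->", access(page))
print("page faults:", page_faults)  # 2: first touch of page 2 and of page 3
```

The program sees the same result whether or not the page was resident. A fault only makes that access slower.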

- Not really: Disk as extra memory. Disk I/O is slow. So using disk just as "extra memory" is not really the idea. Yes, it is extra memory, but the program runs much slower if there is too much disk I/O. Recall Memory hierarchy.
- More like: Disk as overflow of memory. What we really use disk for is as an overflow/buffer/cache of memory. We use it so we don't have to worry about the exact size of memory, or worry that launching the next program will cause the OS to crash. Instead the system slows down gracefully as we run more programs. (More page faults, more disk I/O, system slows, user closes some programs.) Without using disk as overflow, when we run out of memory there will be a sudden and catastrophic crash of the system.

Major and minor page faults

Minor (soft) page fault - Page is actually in RAM, but was not marked as part of the "working set" for this process.

e.g. Page was pre-fetched in case needed.

e.g. Page was brought into RAM for another process.

e.g. Old page marked free but not overwritten.

Can resolve minor page fault without disk read.

Major (hard) page fault - Need a disk read. Takes much longer to return.
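A process can also read its own fault counts. The sketch below uses Python's standard `resource` module, which is available on Unix-like systems only (not Windows). The 50 MB allocation is an arbitrary choice to give the process some fresh memory to touch. The actual numbers depend on the system, so none are shown.

```python
import resource

# Fault counts for the current process so far.
before = resource.getrusage(resource.RUSAGE_SELF)
print("minor page faults:", before.ru_minflt)
print("major page faults:", before.ru_majflt)

# Allocate a fresh block of memory, then look again.
data = bytearray(50 * 1024 * 1024)
after = resource.getrusage(resource.RUSAGE_SELF)
print("minor page faults since first reading:",
      after.ru_minflt - before.ru_minflt)
print("major page faults since first reading:",
      after.ru_majflt - before.ru_majflt)
```

Typically the minor count rises as new memory is first used. The major count rises only if something has to be read from disk.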

Viewing page faults

On Linux we can look at major and minor page faults using ps:

    # show min_flt and maj_flt
    $ ps -A -o user,pid,ppid,min_flt,maj_flt,comm,args

    # sorted descending by number of minor page faults:
    $ ps -A -o user,pid,ppid,min_flt,maj_flt,comm,args | sort -nr -k 4

    USER PID PPID MINFL MAJFL COMMAND COMMAND
    root 1198 1 20509703 0 xe-daemon /bin/bash /usr/sbin/xe-daemon
    root 2920 1 10267099 0 sshd /usr/sbin/sshd
    root 3172 1 6000382 1 xinetd /usr/sbin/xinetd
    root 11034 1 1176904 0 fail2ban-server /usr/bin/python /usr/bin/fail2ban-server
    root 5293 1 975768 28 nscd /usr/sbin/nscd
    root 3149 1 193727 0 cron /usr/sbin/cron


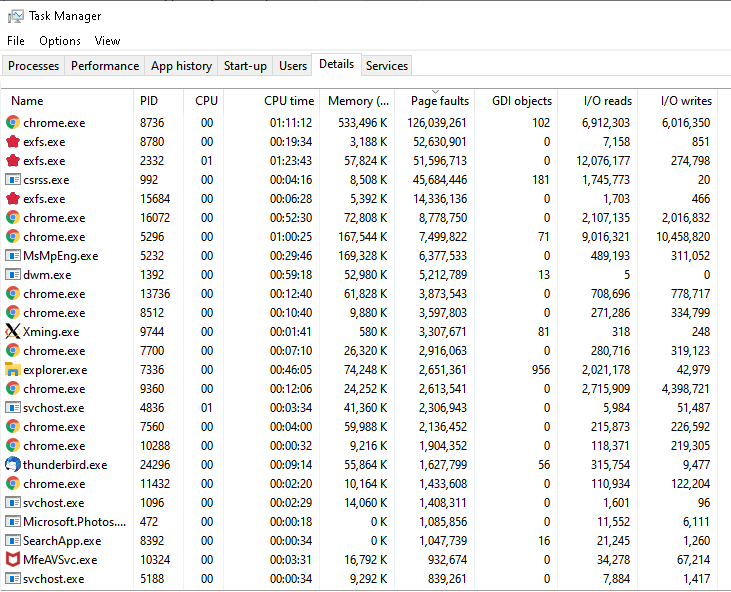

On Windows we can look at combined page faults using Windows Task Manager. Note the "Page faults" column on Windows 10 Task Manager. "Page faults" here combines both hard and soft page faults.

Where to swap to?

Swap to an area of disk dedicated to swapping. Maybe entirely separate from the file system.

On UNIX/Linux, swap to a partition that has no file system on it. The user doesn't have to see it. It doesn't have file names. It is not addressable by the user.

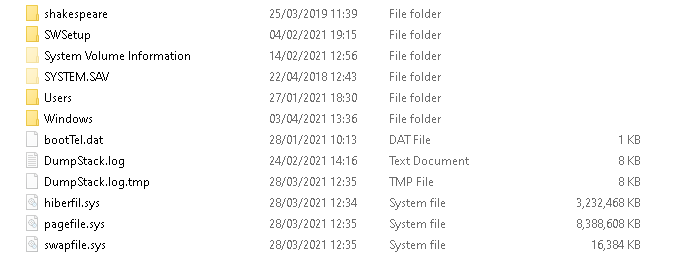

On Windows, swap to the named file C:\pagefile.sys. Might move this onto its own partition.

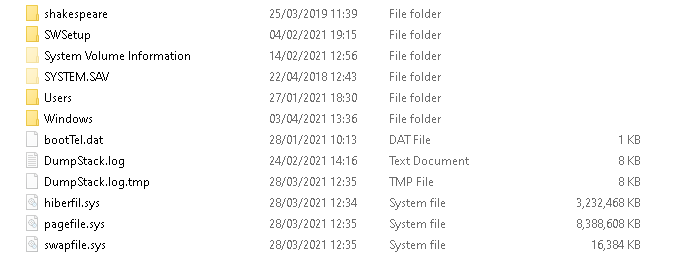

Windows 10 - File Explorer - C drive. View - Show Hidden items. Then can see C:\pagefile.sys (size here 8 G).

Hibernation

Another form of dumping memory to disk is hibernation. Power down with programs open. Write a dump of memory to disk. When powering up, read the image of memory back from disk.