Deep learning

There has been massive growth in neural networks since 2005.

- There were issues with scaling:

- Modern neural networks have many hidden layers (could be something like 20 layers).

- Google Brain has implemented networks with 1 billion weights.

- GPT-4 from OpenAI is said to have over 1 trillion parameters.

- Deep learning

New approaches

A series of new approaches led to "deep neural networks": fixes for memory and credit assignment, better weight initialisation, more zero-output nodes, GPUs as parallel hardware, and other modifications.

- Long short-term memory (LSTM). A recurrent architecture with gated memory cells, trained by back-prop, that allows recurrent networks to scale to long sequences.

Breakthrough paper for deep neural networks:

- "A fast learning algorithm for deep belief nets". Hinton, G. E., Osindero, S., & Teh, Y. W. (2006). Neural Computation, 18(7), 1527-1554.

Weights are initialised by greedy layer-by-layer pre-training rather than randomly.

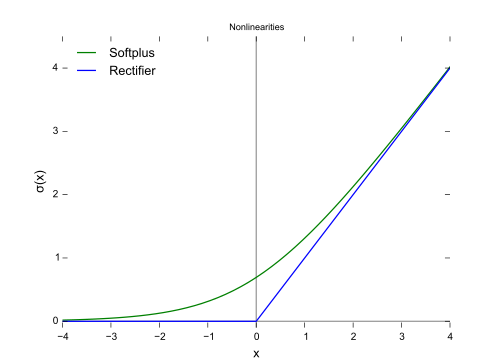

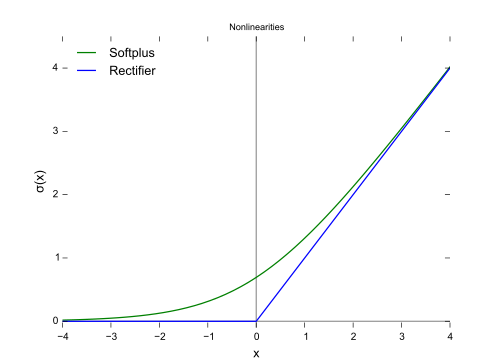

Deep learning research found problems with using the Sigmoid function, and other similar smooth functions, as the activation function.

A much simpler activation function, the Rectifier function, turns out to have important properties for deep neural networks.

The Rectifier function is:

f(x) = max(0,x)

The "Rectifier" activation function (blue).

Properties:

- Slope is 0 below x=0.

- Not differentiable at point x=0

- Slope is constant (equal to 1) for x above 0.
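The definition and the slope properties listed above can be sketched in a few lines of JavaScript. This is a minimal illustration, not code from any particular library. The function names `relu`, `reluSlope` and `sigmoid` are chosen here for the example, and returning a slope of 0 at exactly x=0 is an assumed convention, since the function is not differentiable at that point.

```javascript
// Rectifier activation: f(x) = max(0, x).
function relu(x) {
  return Math.max(0, x);
}

// Slope of the rectifier: 0 below x=0, constant 1 above x=0.
// At x=0 the function is not differentiable; returning 0 there
// is a convention assumed for this sketch.
function reluSlope(x) {
  return x > 0 ? 1 : 0;
}

// Sigmoid, for comparison: never exactly 0 for ordinary inputs.
function sigmoid(x) {
  return 1 / (1 + Math.exp(-x));
}

// Print input, rectifier output, rectifier slope, sigmoid output.
for (const x of [-2, -0.5, 0, 0.5, 2]) {
  console.log(x, relu(x), reluSlope(x), sigmoid(x).toFixed(3));
}
```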

Impact on neural network learning:

- Using this activation function vastly increases the number of nodes outputting zero for a given input. Meaning these nodes can be entirely ignored by the next layer (as opposed to a system where almost all nodes are in play, even if only by a small amount).

- This leads to what is called "sparse representations" and makes very large networks computationally practical.

- It is similar to "separating the inputs".

- It is much faster to calculate than sigmoid. Very important for scaling.
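The sparsity effect described above can be seen in a small experiment. The sketch below is a toy illustration under stated assumptions, not a real network: it builds one layer of nodes with random weights in [-1, 1), feeds it one random input vector, and counts how many nodes output exactly zero under each activation function. The helper names (`makeRandom`, `countZeroOutputs`) and the layer sizes are invented for the example.

```javascript
// Small deterministic pseudo-random generator (a linear congruential
// generator), used only so the sketch is repeatable. Values in [-1, 1).
function makeRandom(seed) {
  let state = seed >>> 0;
  return function () {
    state = (Math.imul(state, 1664525) + 1013904223) >>> 0;
    return state / 2147483648 - 1;
  };
}

const relu = (x) => Math.max(0, x);
const sigmoid = (x) => 1 / (1 + Math.exp(-x));

// Count the nodes in one layer whose output is exactly zero.
function countZeroOutputs(activation, numNodes, numInputs, seed) {
  const random = makeRandom(seed);
  const inputs = Array.from({ length: numInputs }, () => random());
  let zeros = 0;
  for (let node = 0; node < numNodes; node++) {
    let sum = 0;
    for (let i = 0; i < numInputs; i++) {
      sum += random() * inputs[i]; // random weight times input
    }
    if (activation(sum) === 0) zeros++;
  }
  return zeros;
}

const reluZeros = countZeroOutputs(relu, 1000, 20, 42);
const sigmoidZeros = countZeroOutputs(sigmoid, 1000, 20, 42);
console.log(`Rectifier: ${reluZeros} of 1000 nodes output zero`);
console.log(`Sigmoid: ${sigmoidZeros} of 1000 nodes output zero`);
```

With weights centred on zero, a large share of the rectifier nodes should output zero, while the sigmoid nodes all output some small non-zero value. A next layer can skip the zero-output nodes entirely.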

A neural network maps naturally to parallel hardware. It consists almost entirely of simple calculations that could be done in parallel, with a CPU at each node. It is very wasteful to implement a neural network on serial hardware.

Modern computers already have massively parallel systems for doing simple calculations: GPUs. So implementing neural networks on GPUs became an important part of Deep Learning.

- AI and Compute. 2018 post about the growth in AI computing power, driven by parallelisation on GPUs.

Other hardware

Neural networks in JS on GPU

- WebGL is for JavaScript graphics in the web browser.

It allows intensive graphics calculations to be done by the GPU.

- These calculations can be any calculations, e.g. for neural networks, not actually graphics.

- There are JS libraries to do neural network calculations in JS in the browser, using the GPU for speed.

- Example: tensorflow.js. Use the GPU "backend" to get performance perhaps 100 times faster than the plain CPU "backend".

Click to run World: canvas webgl at Ancient Brain.

- This World shows a bit of what coding on GPU is like.

- This World has no graphics library (like P5 or Three.js).

- It does raw WebGL coding.

- This involves your JS program constructing a 2nd program, a "shader program", written in the GLSL language (which looks a bit like the C language).

- Your JS then compiles the 2nd program and sends the compiled version to the GPU for execution. See lines like: gl.compileShader ( vs );

- The 2nd program can actually do arbitrary calculations, not just graphics.
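The compile-and-send steps described above typically look something like the following. This is a generic sketch of the standard WebGL calls, not the code of the World itself. The shader sources and the helper names `compileShader` and `buildProgram` are made up for the example. The helpers take the WebGL context `gl` as a parameter, and the last few lines only run in a browser.

```javascript
// GLSL source for the "2nd program": a trivial vertex shader
// and a fragment shader that colours every pixel red.
const vertexSource = `
attribute vec4 position;
void main() {
  gl_Position = position;
}
`;

const fragmentSource = `
precision mediump float;
void main() {
  gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0);
}
`;

// Compile one shader. `gl` is a WebGL context, e.g. from
// canvas.getContext("webgl"); `type` is gl.VERTEX_SHADER or
// gl.FRAGMENT_SHADER.
function compileShader(gl, type, source) {
  const shader = gl.createShader(type);
  gl.shaderSource(shader, source);
  gl.compileShader(shader);
  if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS)) {
    throw new Error("Shader compile failed: " + gl.getShaderInfoLog(shader));
  }
  return shader;
}

// Link both shaders into one program that the GPU can execute.
function buildProgram(gl, vsSource, fsSource) {
  const vs = compileShader(gl, gl.VERTEX_SHADER, vsSource);
  const fs = compileShader(gl, gl.FRAGMENT_SHADER, fsSource);
  const program = gl.createProgram();
  gl.attachShader(program, vs);
  gl.attachShader(program, fs);
  gl.linkProgram(program);
  if (!gl.getProgramParameter(program, gl.LINK_STATUS)) {
    throw new Error("Program link failed: " + gl.getProgramInfoLog(program));
  }
  return program;
}

// Browser-only usage: skipped where there is no DOM.
if (typeof document !== "undefined") {
  const gl = document.createElement("canvas").getContext("webgl");
  if (gl) {
    gl.useProgram(buildProgram(gl, vertexSource, fragmentSource));
  }
}
```

Checking the compile and link status matters in practice: the GLSL source is just a string as far as JS is concerned, so mistakes in it only show up when the GPU driver compiles it.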

Click to run World: Recognise any image at Ancient Brain.

- How to use:

- Upload any image to Ancient Brain.

- Enter URL of image and see if program can recognise it.

- Here are some images to try:

- This uses the ML5 AI JavaScript library.

- That link explains how the AI model is loaded.

- ml5.imageClassifier uses a neural network to classify an image using a pre-trained model.

- The pre-trained model, "MobileNet", was trained on a sample of a database of approximately 15 million images.

- "MobileNet" is a bit over 10 MB in size and is actually downloaded to the client (via CORS) when the page runs.

Nobel Prizes for AI

Showing the remarkable cross-subject impact of AI.

The brain has 100 billion neurons, each with up to 15,000 connections with other neurons. (Actually these figures include the entire nervous system, distributed over the body, which can be seen as an extension of the brain.)

The adult brain of H. sapiens is the most complex known object in the universe. Perhaps the most complex object that has ever existed.

One brain is far more complex than the entire world telephone system / Internet (which has a smaller number of nodes, and much less connectivity).

If we considered each neuron as roughly the equivalent of a simple CPU with 100 KB of memory, then we have 100 billion CPUs with 10,000 terabytes of memory, all working in parallel and massively interconnected with hundreds of trillions of connections.
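The arithmetic behind these figures can be checked with a short sketch. It assumes decimal units (1 KB = 1000 bytes, 1 TB = 10^12 bytes) to match the round numbers quoted. The lower figure of 1,000 connections per neuron is an illustrative assumption, while 15,000 is the "up to" figure given earlier.

```javascript
// Decimal units are an assumption made to match the round numbers.
const NEURONS = 100e9;          // 100 billion neurons
const BYTES_PER_NEURON = 100e3; // 100 KB of memory per neuron
const TB = 1e12;                // bytes in a terabyte
const TRILLION = 1e12;

const totalMemoryTB = (NEURONS * BYTES_PER_NEURON) / TB;

// Range of total connections, in trillions.
// 1,000 per neuron is an assumed lower figure; 15,000 is the upper one.
const minConnections = (NEURONS * 1000) / TRILLION;
const maxConnections = (NEURONS * 15000) / TRILLION;

console.log(`Memory: ${totalMemoryTB} TB`);
console.log(`Connections: ${minConnections} to ${maxConnections} trillion`);
// Memory: 10000 TB
// Connections: 100 to 1500 trillion
```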

It is not surprising that the brain is so complex and, at the same time, that consciousness and intelligence are mysterious. What would be surprising would be if the brain were a simple object.

- 1999 estimate:

- Ray Kurzweil, The Age of Spiritual Machines, 1999, Ch.6, argues that all of the world's computers plus the Internet can now match the computational capacity (in terms of calculations per second) of a single human brain.

- Internet - say 500 million machines times an average of say 50 million calculations per second = 25,000 trillion calculations per second.

- A single brain - 100 billion neurons times an average 1000 connections each, times 200 calculations per second = 20,000 trillion calculations per second.

- Obviously the Internet's computers are not all wired up to act as a single machine - but they could be, in theory.

- However, the question of very sparse connectivity still puts the Internet behind a single brain.

- Still, it is encouraging what humanity has built.
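The two totals in the 1999 estimate are simple products, and can be reproduced as follows. This sketch only multiplies out the figures quoted above. The variable names are chosen for the example.

```javascript
const TRILLION = 1e12;

// Internet: 500 million machines x 50 million calculations per second.
const internetCps = 500e6 * 50e6;

// Brain: 100 billion neurons x 1000 connections x 200 calculations per second.
const brainCps = 100e9 * 1000 * 200;

console.log(`Internet: ${internetCps / TRILLION} trillion calculations per second`);
console.log(`Brain: ${brainCps / TRILLION} trillion calculations per second`);
console.log(`Internet / brain ratio: ${internetCps / brainCps}`);
// Internet: 25000 trillion calculations per second
// Brain: 20000 trillion calculations per second
// Internet / brain ratio: 1.25
```

On these numbers the two are within a factor of 1.25 of each other, which is why the comparison turns on connectivity rather than raw calculation rate.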

- 2008 estimate:

- Kevin Kelly, Wired, July 2008 compares the entire planet of computers, phones, cameras, etc. to a single human brain.

- Only on connectivity can the brain now compete, according to his numbers. (Apart from the fact that the world's computers and phones are not all wired up to act as a single machine.)

- List of animals by number of neurons

- Human - 86 billion neurons

- Elephant - 257 billion neurons

- "If the human mind was simple enough to understand, we'd be too simple to understand it." - IBM scientist Emerson Pugh.