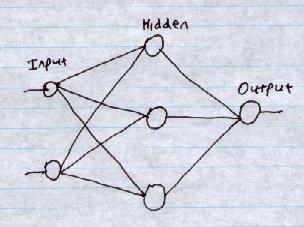

That is, a network with more than one layer of links. Such a network has what are called "hidden" nodes. The name has nothing to do with security: it just means these nodes are not "visible" from the Input or Output sides. Rather, they sit somewhere inside the network.

Multi-layer Neural Networks allow much more complex classifications.

Consider the network:

The 3 hidden nodes each draw a line and fire if the input point is on one side of the line.

The output node could be a 3-dimensional AND gate - fire if all 3 hidden nodes fire.

3 inputs. Let the 3 weights be 1.

Then what threshold to implement an AND gate?

What threshold to implement an OR gate?

In visual terms, the AND gate is separating the point (1,1,1) from the points:

(0,0,0), (0,1,0), (0,0,1), (0,1,1), (1,0,0), (1,1,0), (1,0,1)

Imagine a 3-d cube defined by these points. The 3-dimensional AND gate perceptron implements a 2d plane to separate the corner point (1,1,1) from the other points in the cube.
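The two threshold questions above can be checked by brute force over the 8 corners of the cube. The following is a minimal sketch, not a definitive implementation. It assumes a unit fires when its weighted sum is strictly greater than its threshold; the helper name `fires` and the values 2.5 and 0.5 are illustrative choices (any AND threshold from 2 up to but not including 3, and any OR threshold from 0 up to but not including 1, would work under that convention).

```python
from itertools import product

def fires(inputs, weights, threshold):
    """Threshold unit: output 1 if the weighted sum exceeds the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0

WEIGHTS = (1, 1, 1)      # all 3 weights are 1, as in the text
AND_THRESHOLD = 2.5      # assumed value: only a sum of 3 exceeds it
OR_THRESHOLD = 0.5       # assumed value: any sum of 1 or more exceeds it

# Walk the 8 corners of the cube and show what each gate outputs.
for corner in product((0, 1), repeat=3):
    and_out = fires(corner, WEIGHTS, AND_THRESHOLD)
    or_out = fires(corner, WEIGHTS, OR_THRESHOLD)
    print(corner, "AND:", and_out, "OR:", or_out)
```

With these thresholds the AND unit fires only at (1,1,1), and the OR unit fires everywhere except (0,0,0). In both cases the unit is a plane cutting one corner off the cube.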

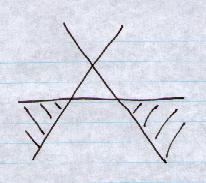

Construct a triangular area from 3 intersecting lines in the 2-dimensional plane.
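As a rough sketch of this construction, the code below builds a triangle from 3 hidden threshold units feeding an AND output unit. The particular triangle (corners at (0,0), (1,0) and (0,1)), the helper names, and the "fire if the weighted sum is strictly greater than the threshold" convention are all assumptions made for illustration. They are not taken from the figure.

```python
def fires(inputs, weights, threshold):
    """Threshold unit: output 1 if the weighted sum exceeds the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0

# Each hidden unit draws one line and fires on one side of it.
# Hypothetical triangle with corners (0,0), (1,0), (0,1).
HIDDEN_UNITS = [
    ((1.0, 0.0), 0.0),     # fires when x > 0
    ((0.0, 1.0), 0.0),     # fires when y > 0
    ((-1.0, -1.0), -1.0),  # fires when -x - y > -1, i.e. x + y < 1
]

def in_triangle(point):
    """2-layer network: 3 hidden line units, then a 3-input AND gate."""
    hidden_out = [fires(point, w, t) for w, t in HIDDEN_UNITS]
    return fires(hidden_out, (1, 1, 1), 2.5)  # AND: all 3 must fire

print(in_triangle((0.2, 0.3)))  # 1: inside the triangle
print(in_triangle((0.8, 0.8)))  # 0: wrong side of the line x + y = 1
print(in_triangle((-0.1, 0.5))) # 0: wrong side of the line x = 0
```

Adding more hidden units (more lines) and raising the AND threshold to match gives a polygon with more sides.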

To only fire when the point is in one of the 2 disjoint areas:

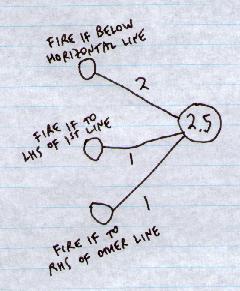

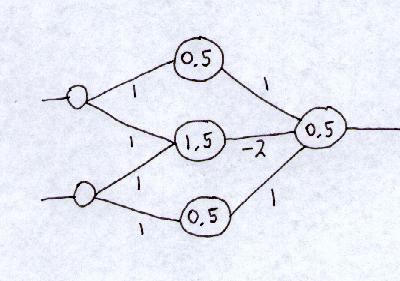

This hand-designed network will also do the job. (Just Hidden and Output layers shown. Weights shown on connections. Thresholds circled on nodes.):

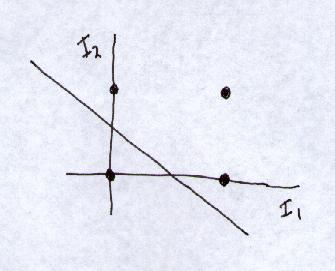

A 2-layer network can classify points inside any n arbitrary lines (n hidden units plus an AND function).

i.e. It can classify:

To classify a concave polygon (e.g. a concave star-shaped polygon), compose it out of adjacent disjoint convex shapes and an OR function. A 3-layer network can do this.

A 3-layer network can classify any number of disjoint convex or concave shapes. Use 2-layer networks to classify each convex region to any level of granularity required (just add more lines, and more disjoint areas), and combine their outputs with an OR gate.
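The layering described here (line units, then one AND per convex region, then a single OR) can be sketched as follows. This is an illustrative sketch only: the two unit squares, the helper names `fires`, `unit_square` and `classify`, and the strict "greater than threshold" firing rule are assumptions, chosen to keep the example small.

```python
def fires(inputs, weights, threshold):
    """Threshold unit: output 1 if the weighted sum exceeds the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0

def unit_square(x0, y0):
    """Four line units bounding the open square (x0, x0+1) x (y0, y0+1)."""
    return [
        ((1, 0), x0),          # x > x0
        ((-1, 0), -(x0 + 1)),  # x < x0 + 1
        ((0, 1), y0),          # y > y0
        ((0, -1), -(y0 + 1)),  # y < y0 + 1
    ]

# Two disjoint convex regions (hypothetical example shapes).
REGIONS = [unit_square(0, 0), unit_square(3, 0)]

def classify(point):
    """3-layer network: line units -> one AND per region -> one OR."""
    and_outputs = []
    for lines in REGIONS:
        hidden = [fires(point, w, t) for w, t in lines]
        # AND gate: weights of 1, threshold just below the number of inputs.
        and_outputs.append(fires(hidden, [1] * len(hidden), len(hidden) - 0.5))
    # OR gate: weights of 1, threshold 0.5, fires if any region fired.
    return fires(and_outputs, [1] * len(and_outputs), 0.5)

print(classify((0.5, 0.5)))  # 1: inside the first square
print(classify((3.5, 0.5)))  # 1: inside the second square
print(classify((2.0, 0.5)))  # 0: in the gap between them
```

A concave shape would be handled the same way: split it into convex pieces, give each piece its own entry in `REGIONS`, and let the OR gate join them.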

Then, like the bed/table/chair network above, we can have a net that fires one output for one complex shape, another output for another arbitrary complex shape.

And we can do this with shapes in n dimensions, not just 2 or 3.

This hand-designed network will also do the job:

2 connections in first layer not shown (weight = 0).

We have multiple divisions.

Basically, we use the 1.5 node to divide (1,1) from the others.

We use the 0.5 nodes to split off (0,0) from the others.

And then we combine the outputs to split off (1,1) from (1,0) and (0,1).
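The figure is not reproduced here, so the exact weights in the diagram are unknown. As a hedged illustration, the sketch below uses one common hand-built XOR construction that matches the thresholds mentioned above: a hidden unit with threshold 1.5 (an AND), a hidden unit with threshold 0.5 (an OR), and an output unit with threshold 0.5 that fires when the OR unit fires but the AND unit does not. The weights, the function names, and the strict "greater than threshold" rule are assumptions, and this may differ from the network in the diagram.

```python
def fires(inputs, weights, threshold):
    """Threshold unit: output 1 if the weighted sum exceeds the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0

def xor_net(x1, x2):
    """Hand-designed XOR network (assumed weights, see lead-in)."""
    h_and = fires((x1, x2), (1, 1), 1.5)  # divides (1,1) from the others
    h_or = fires((x1, x2), (1, 1), 0.5)   # splits off (0,0) from the others
    # Output: OR minus AND, so (1,1) is split off from (1,0) and (0,1).
    return fires((h_or, h_and), (1, -1), 0.5)

for x1, x2 in [(0, 0), (1, 0), (0, 1), (1, 1)]:
    print("Input", x1, x2, "Output", xor_net(x1, x2))
```

Running this prints outputs 0, 1, 1, 0 for the four inputs, which is the XOR truth table.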

Question - How did we design the XOR network?

Answer - We don't want to. Neural networks wouldn't be popular if you had to.

We want to learn these weights.

We want an algorithm where we can repeatedly present the network with exemplars:

| Input | Output |
|---|---|
| 0 0 | 0 |
| 1 0 | 1 |
| 0 1 | 1 |
| 1 1 | 0 |

and it will learn those weights and thresholds.

The bit in yellow is the hard part.