OS and hardware

The fundamental job of an OS is to make the hardware usable for programs.

To do this, it needs to understand the properties of its different forms of storage

(in particular the access speeds and volumes).

This is the

"memory hierarchy"

(see below).

First we consider what a program is, and how a program can be implemented.

What is a Program?

A list of instructions.

A precise definition of what is to be done,

as opposed to an English language description of what is to be done.

A precise description is an algorithm,

a recipe that can be followed blindly by a machine.

A list of instructions to be executed one by one

by a machine that has no memory of past instructions

and no knowledge of future instructions.

There are many processes that we observe in nature

(animal vision, language use, consciousness)

and invent in culture (calculating prime numbers, sorting a list).

Some we have converted to algorithms.

Some remain in the domain of an English language description.

The

Church-Turing thesis

claims that

"Any well-defined process can be written as an algorithm".

How is this Program, this list of instructions, to be encoded?

What (if any) is the difference between the machine

and the program?

Many possibilities:

- Hardware (mechanical/electronic)

is specially constructed to execute the algorithm.

All you do is turn the machine on.

e.g. Searle's "Chinese Room" thought experiment.

(Hardware does not have to be general-purpose.

Hardware can encode any algorithm at all.)

- Hardware is specially constructed to execute the algorithm,

but there is some input data which is allowed to vary.

This input data is read

at run-time by the machine.

e.g. Lots of machinery in factories and transport,

calculators,

clocks,

(old) watches,

(old) mobile phones.

e.g. Many computer networking algorithms in routers

(such as error-detection and error-correction)

are done in hardware since they are run billions of times.

- Hardware is a general-purpose device,

and the program, as well as the input data, is read

at run-time by the machine.

e.g. Every normal computer.

A general-purpose device implements

a number of simple, low-level hardware instructions

(the instruction set)

that can combine (perhaps in the millions) to run any algorithm.

A human programmer might write the program in the hardware instructions themselves.

Or might write the program in a High Level Language (HLL).

A compiler translates this into low-level instructions

for the

particular hardware.
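
The three possibilities above can be sketched in Python. This is only an illustration: the function names and the three opcodes (LOAD, ADD, STORE) are invented for the sketch, and a real instruction set would also need branching before it could run any algorithm.

```python
# 1. Fixed-function: algorithm and data are both built in.
def hardwired():
    return 2 + 3

# 2. Fixed algorithm, but the input data is read at run time.
def adder(a, b):
    return a + b

# 3. General-purpose: the program itself is data read at run time.
#    The opcodes are invented for this sketch.
def run(program, memory):
    acc = 0                              # a single register (accumulator)
    for opcode, operand in program:      # take the instructions one by one
        if opcode == "LOAD":
            acc = memory[operand]        # memory -> register
        elif opcode == "ADD":
            acc += memory[operand]       # register += memory value
        elif opcode == "STORE":
            memory[operand] = acc        # register -> memory
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return memory

# Two different programs for the same unchanged "machine".
add_program = [("LOAD", "x"), ("ADD", "y"), ("STORE", "x")]
double_program = [("LOAD", "x"), ("ADD", "x"), ("STORE", "x")]

print(hardwired())                               # 5
print(adder(2, 3))                               # 5
print(run(add_program, {"x": 2, "y": 3}))        # {'x': 5, 'y': 3}
print(run(double_program, {"x": 7}))             # {'x': 14}
```

Only the third form can be given new behaviour without being rebuilt: `run` never changes, only the list of instructions passed to it.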

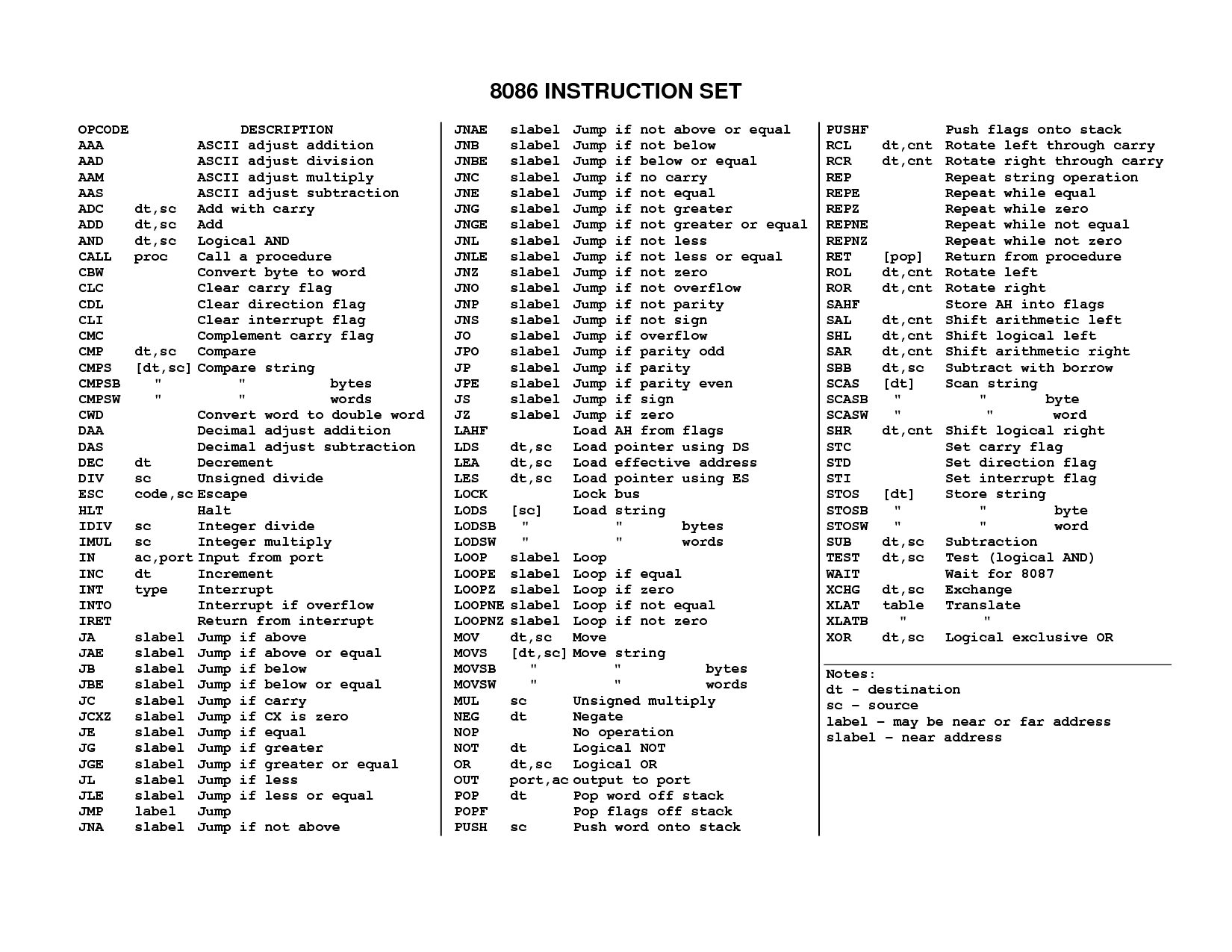

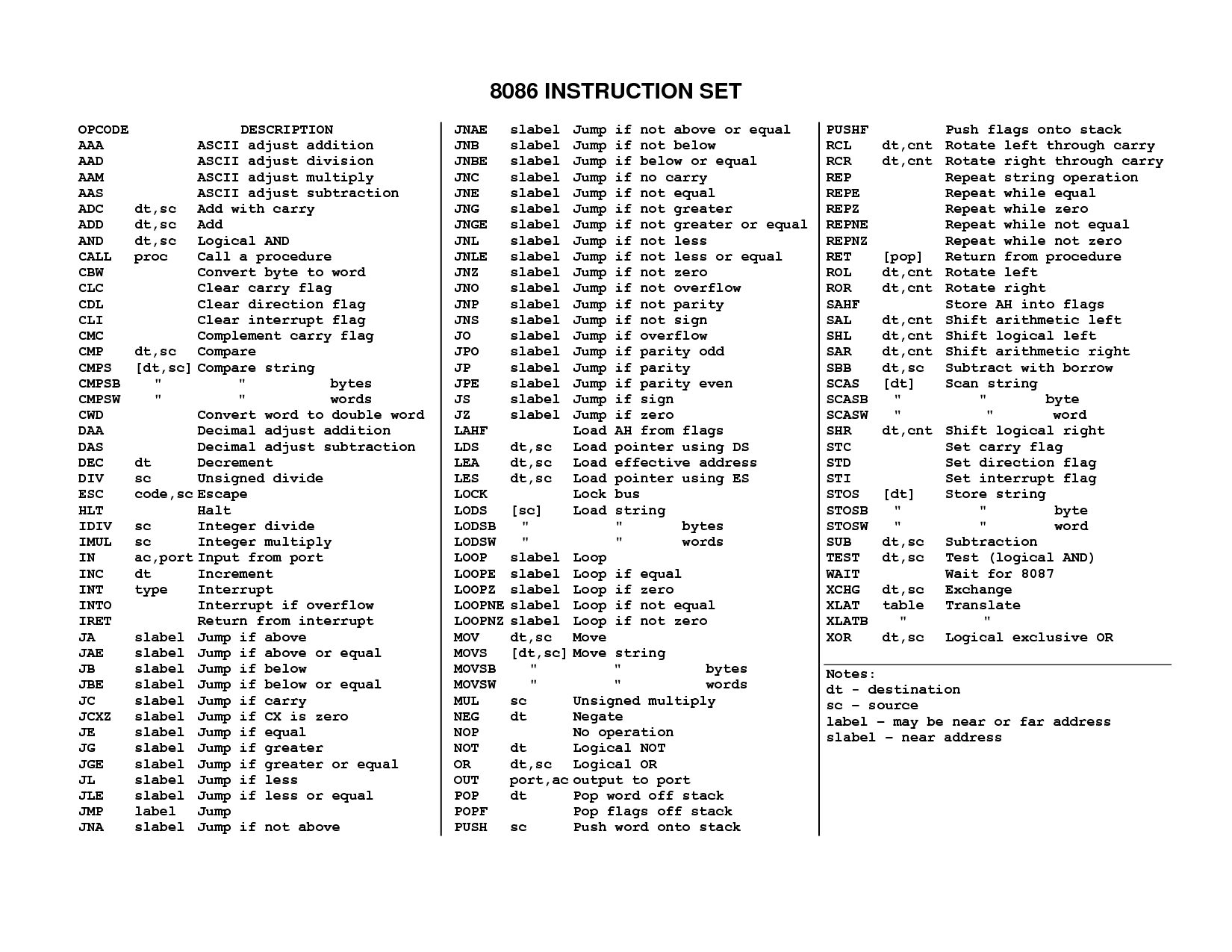

The complete Intel

x86 instruction set

(the start of the popular x86 CPU family).

For full reference guide, see

Intel manuals

for the

x86-64

architecture.

If you write directly in the low-level instructions, the program could be much more efficient.

But you can only run the program on that particular hardware.

If you write in a HLL,

the same program can be recompiled

into the low-level instruction set of a different machine,

which is an automated process,

and much easier than having to re-write the program from scratch.

One HLL instruction like

x := x+y+5

may translate into

many low-level instructions:

find the memory location represented by "x"

read from memory into a register

do the same with "y" into another register

carry out various arithmetic operations

retrieve the results from some further register

write back into a memory location
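
One way to see this expansion for yourself is to ask Python for the bytecode it generates for the same statement. This is an analogy rather than the real thing: the bytecode targets Python's virtual machine, not hardware, and the exact instruction list varies between Python versions, so no particular output is assumed here.

```python
import dis

# Compile one high-level statement and list the lower-level
# instructions the Python compiler produces for it.
code = compile("x = x + y + 5", "<example>", "exec")
instructions = list(dis.get_instructions(code))

for ins in instructions:
    print(ins.opname, ins.argrepr)

print(len(instructions), "bytecode instructions for one statement")
```

The listing typically shows the same pattern as the steps above: load the operands, do the arithmetic, store the result.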

CISC v RISC

There is a body of theory,

Computability theory,

showing what set of

low-level instructions

you need to be able to run any algorithm.

We can have pressure to:

- Increase the number of hardware instructions

- CISC model.

Observe the system in operation.

Anything done many times gets its own dedicated

instruction in hardware.

(This is the broad history of desktop Operating Systems).

Example:

Intel x86 family.

- Decrease the number of hardware instructions

- RISC model.

Simpler CPU runs faster.

It may not matter if it executes a higher number of low-level instructions,

provided each one runs much faster.

Also lower power needs, less heat. Suitable for mobile, portable devices.

Examples:

ARM architecture.
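
The trade-off can be put as simple arithmetic: execution time is instruction count times cycles per instruction times cycle time. The sketch below uses invented figures for two hypothetical CPUs. They are not measurements of any real x86 or ARM chip.

```python
# Execution time = instruction count x cycles per instruction x cycle time.
# All figures below are invented to illustrate the trade-off.

def run_time_ns(instruction_count, cycles_per_instruction, cycle_time_ns):
    return instruction_count * cycles_per_instruction * cycle_time_ns

# Hypothetical "complex" CPU: fewer instructions, each one slower.
cisc_ns = run_time_ns(instruction_count=1_000_000,
                      cycles_per_instruction=4,
                      cycle_time_ns=1)

# Hypothetical "simple" CPU: 50% more instructions, each done in one cycle.
risc_ns = run_time_ns(instruction_count=1_500_000,
                      cycles_per_instruction=1,
                      cycle_time_ns=1)

print(cisc_ns)  # 4000000
print(risc_ns)  # 1500000
```

With these made-up numbers the simpler CPU finishes sooner despite executing more instructions. Different numbers could just as easily favour the other side.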

As Operating Systems have evolved over the years,

they constantly redefine the boundary

between what should be hardware and what should be software.

We have different types of

Computer memory.

Permanent v. Temporary.

- Program is kept until needed on some

permanent (or "non-volatile")

medium (hard disk, flash drive, DVD,

backup tape).

"Permanent" means retains data after power off.

- To run the program, it is loaded into some temporary (or "volatile")

but faster medium (RAM).

Temporary data structures and variables are created,

and worked with, in this

temporary medium.

- In fact the CPU may not work directly with this medium,

but require that for each instruction,

data is read from RAM and loaded temporarily

into an even faster, volatile medium (registers).

e.g. To implement X := X+1,

where program variable X is stored at RAM location 100:

MOV AX, [100]

INC AX

MOV [100], AX

- The results of the program are output either to some temporary

or permanent medium.

- When the program terminates, its copy (the instructions) in the temporary medium

is lost, along with all of its temporary data and variables.

The long-term copy survives however, on the permanent medium

it was originally read from.
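
The life cycle described above can be traced in a small simulation. Each storage tier is modelled as an ordinary Python object, and the three assembly instructions are matched as plain strings. It is a sketch of the idea, not a model of a real CPU, and the names `disk`, `ram` and `ax` are chosen for this example.

```python
# Simulation only: each storage tier is modelled as a Python object.
disk = {"prog": ["MOV AX, [100]", "INC AX", "MOV [100], AX"]}  # permanent copy

def run(disk, x):
    ram = {"code": list(disk["prog"]), 100: x}   # load program from disk into RAM
    ax = 0                                       # the AX register
    for instruction in ram["code"]:
        if instruction == "MOV AX, [100]":
            ax = ram[100]                        # RAM -> register
        elif instruction == "INC AX":
            ax += 1                              # arithmetic happens in the register
        elif instruction == "MOV [100], AX":
            ram[100] = ax                        # register -> RAM
    return ram[100]                              # ram and ax are discarded on return

print(run(disk, 41))        # 42
print(len(disk["prog"]))    # 3: the copy on "disk" is untouched
```

Every call to `run` starts from a fresh copy in `ram`. The only thing that persists between runs is what is held in `disk`.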

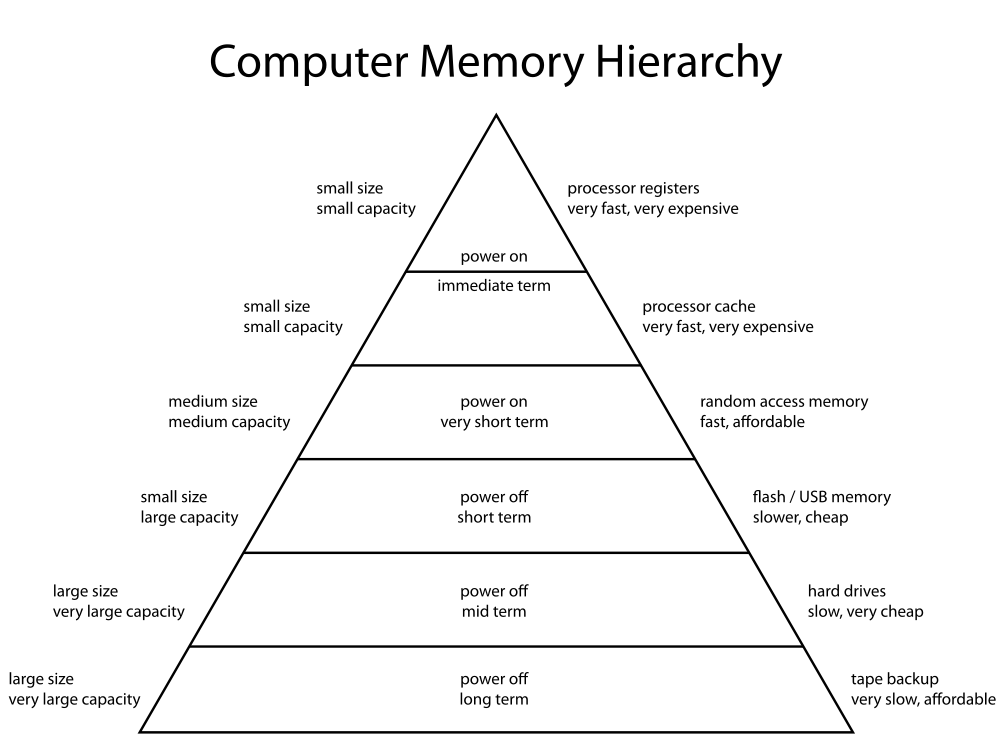

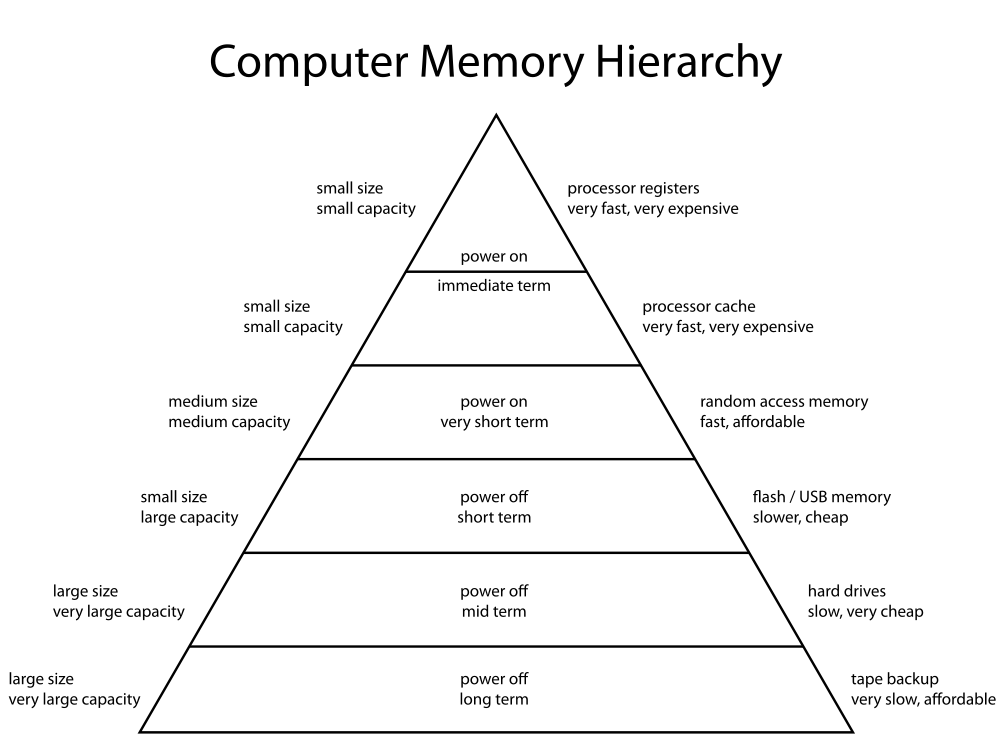

The

memory hierarchy

in computer architecture.

Credit

here.

- "Memory" =

RAM.

An

unstructured collection of locations, each of which

is read-write, randomly-accessible.

= "working memory"

= the fast, temporary medium that we actually run programs in.

Reset every time we restart the computer.

- "Disk" =

Hard disk (moving parts),

Solid state drive (flash memory, see below),

USB flash drive,

etc.

The permanent (non-volatile) medium.

A more structured space than memory,

formatted with a file system in place,

where different zones of the disk have names

and are separated from each other.

The permanent medium that we store programs and data files in.

-

"Read-only" memory

needed for "bootstrapping" code:

- There has always been a need for some

"Read-only" (or at least hard-to-rewrite) non-volatile memory

built-in to the computer

to hold the basic "bootstrapping" code

(the basic code to load an operating system, find devices).

-

ROM

- Read-only memory - used for this.

-

Some forms of ROM can only be written once.

EEPROM

can be repeatedly re-written.

Flash memory

(developed from EEPROM) used for bootstrapping now.

Flash memory can also be used as the main disk.

You can format and structure a flash memory space,

and keep a file system on it.

See solid-state drives

and

USB flash drives.

Up and down the memory hierarchy: Higher speed - Lower volume

A typical computer:

MacBook Pro.

Spec (highest values):

4 MB cache memory.

8 GB RAM.

1 TB disk.

Shows the memory hierarchy of speed and volume.
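
To get a feel for the jump in volume between tiers, the ratios can be worked out directly. This sketch reads the figures above as 4 MB, 8 GB and 1 TB, and assumes binary units (1 KB = 1024 bytes). It says nothing about speed, since the spec above gives none.

```python
# Capacities from the spec, assuming binary units (1 KB = 1024 bytes).
KB = 1024
MB = 1024 * KB
GB = 1024 * MB
TB = 1024 * GB

cache = 4 * MB
ram = 8 * GB
disk = 1 * TB

print(ram // cache)   # 2048: RAM holds 2048 times as much as the cache
print(disk // ram)    # 128: disk holds 128 times as much as RAM
```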

The multi-tier model is a practical necessity, not a mathematical necessity

The multi-tier model is necessary to make machines work in

practice,

with the storage hardware that

exists.

Mathematically, a multi-tier system is not needed to run a program.

Consider the following.

Can we not use RAM instead of registers?

- Can we not just operate on the RAM directly?

No registers needed.

- To do x := x + 1 where x is in RAM location 100, we just do:

INC [100]

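- Mathematician says fine.

Engineer says no RAM can do this by itself.

The circuitry

does not exist to do arithmetic and logical operations

inside RAM.

Only the CPU, working on its registers, has this circuitry.

(Even where an instruction set accepts a memory operand like this, the CPU still reads the value in, changes it, and writes it back.)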

-

RAM is designed just as a medium to store data and return data.

Adding this circuitry to every RAM location

would be a massive overhead, which is why the current, multi-tier

architecture has evolved.

- It is quicker to copy data from gigabytes of RAM into a limited set of registers

than to try some architecture where every location in gigabytes of RAM can be operated on.

If disk was as fast as RAM

-

If disk was as fast as RAM,

then no need to load instructions into RAM from disk to be run.

Run them direct from disk.

-

This is not likely.

Rather, it seems likely

that a volatile medium will always be faster

than a permanent one.

- However, disk can be used as an overflow of RAM:

Paging and Swapping.

Thought experiment

- The above is a thought experiment,

to think about the possibilities for different architectures.

-

Throughout the history of computers,

we needed all the speed we could get,

which is why these complex, multi-tiered

machines have evolved.

-

Mathematically,

this multi-tier system is not necessary.

Only a single medium is needed.

-

From the engineering point of view,

it is doubtful if a single-tier system will ever

come into existence, for the reasons above.

-

But it is useful to think about the possibilities

for different architectures,

especially when later we consider

using disk as an overflow of memory,

and memory as a cache for disk.

-

Machine Dreams, Tom Simonite,

April 21, 2015.

Article

on "The Machine",

a

Hewlett-Packard plan to get rid of the multi-tier system.

"The Machine is designed to [scrap] the distinction between storage and memory. A single large store of memory based on HP's memristors will both hold data and make it available for the processor.

Combining memory and storage isn't a new idea,

but there hasn't yet been a nonvolatile memory technology fast enough to make it practical".